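This article was submitted by an internet user. The views expressed are the author's own and do not represent the position of this site. This site only provides information storage services, holds no ownership of the content, and assumes no related legal liability. If you repost this article, please credit the source: http://www.ldbm.cn/database/60333.html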


网络原理之IP协议(网络层)

目录

前言

什么是IP协议?

IP协议的协议头格式

16位总长度(字节数)

16位标识、3位标志位和13位片偏移

8位生存时间

IP地址管理

1.动态分配IP

2.NAT机制(网络地址转换)

NAT机制是如何工作的

NAT机制的优缺点…

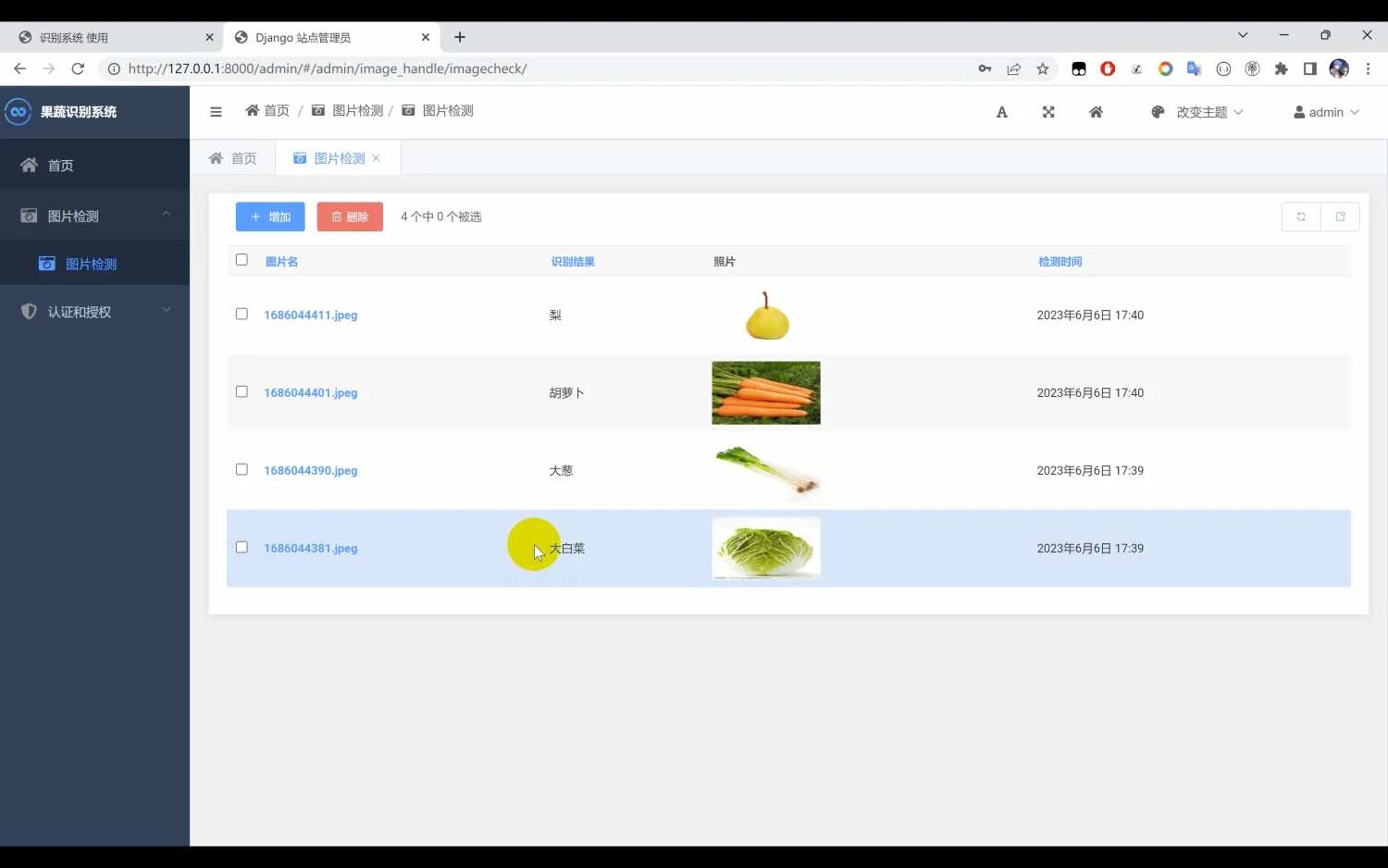

【果蔬识别系统】Python+卷积神经网络算法+人工智能+深度学习+计算机毕设项目+Django网页界面平台

一、介绍

果蔬识别系统,本系统使用Python作为主要开发语言,通过收集了12种常见的水果和蔬菜(‘土豆’, ‘圣女果’, ‘大白菜’, ‘大葱’, ‘梨’, ‘胡萝卜’, ‘芒果’, ‘苹果’, ‘西红柿’, ‘韭菜’, ‘香蕉’, ‘黄瓜’)…

【计网】从零开始学习http协议 --- http的请求与应答

如果你不能飞,那就跑; 如果跑不动,那就走; 实在走不了,那就爬。 无论做什么,你都要勇往直前。 --- 马丁路德金 --- 从零开始学习http协议 1 什么是http协议2 认识URL3 http的请求和应答3.1 服务端设计…

从 Tesla 的 TTPoE 看资源和算法

特斯拉的 ttpoe 出来有一段时间了,不出所料网上一如既往的一堆 pr 文,大多转译自 演讲 ppt 和 Replacing TCP for Low Latency Applications,看了不下 20 篇中文介绍,基本都是上面这篇文章里的内容,车轱辘话颠来倒去。…

深度学习经典模型之BERT(上)

深度学习经典模型之BERT(下) BERT(Bidirectional Encoder Representations from Transformers)是一个双向transformer编码器的言表示模型。来自论文:BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding 。由Google公司的…

Aegisub字幕自动化及函数篇(图文教程附有gif动图展示)(一)

目录 自动化介绍

bord 边框宽度

随机函数

fsvp

随机颜色

move 自动化介绍 自动化介绍:简单来说自动化能让所有字幕行快速拥有你指定的同一种特效 对时间不同的行应用相同的效果

只要设计好一个模板,然后让所有行都执行这个模板上的特效就好了

首先制作模板行…

双十一有哪些值得买?性价比超高的商品一览

随着双十一的脚步越来越近,这场令人热血沸腾的购物狂欢即将开启!为了让大家不在纷繁的商品海洋中迷失,悦悦细心梳理了一份购物清单,分享那些我亲自体验极佳,觉得务必入手的商品。

这些商品不仅价格实惠,而…

【Faster-Rcnn】训练与测试

✨ Blog’s 主页: 白乐天_ξ( ✿>◡❛) 🌈 个人Motto:他强任他强,清风拂山冈! 💫 欢迎来到我的学习笔记! 1.提前准备

1.1. mobaxterm(远程连接服务器)

链接:…

Dify 中的讯飞星火平台工具源码分析

本文主要对 Dify 中的讯飞星火平台工具 spark 进行了源码分析,该工具可根据用户的输入生成图片,由讯飞星火提供图片生成 API。通过本文学习可自行实现将第三方 API 封装为 Dify 中工具的能力。

源码位置:dify-0.6.14\api\core\tools\provide…

python爬虫:从12306网站获取火车站信息

代码逻辑

初始化 (init 方法): 设置请求头信息。设置车站版本号。 同步车站信息 (synchronization 方法): 发送GET请求获取车站信息。返回服务器响应的文本。 提取信息 (extract 方法): 从服务器响应中提取车站信息字符串。去掉字符串末尾的…

中国蚁剑(antSword)安装使用

antSword下载 antSword-Loader下载

作者:程序那点事儿 日期:2024/09/12 19:35 中国蚁剑(AntSword)是一款跨平台的开源网站管理工具,旨在满足渗透测试人员的需求。它是一个功能强大的工具,可以帮助用户管理…

OpenHarmony(鸿蒙南向)——平台驱动开发【PWM】

往期知识点记录: 鸿蒙(HarmonyOS)应用层开发(北向)知识点汇总 鸿蒙(OpenHarmony)南向开发保姆级知识点汇总~ 持续更新中……

概述

功能简介

PWM(Pulse Width Modulationÿ…

【推荐100个unity插件之34】在unity中实现和Live2D虚拟人物的交互——Cubism SDK for Unity

最终效果 文章目录 最终效果前言例子中文官网Live2d模型获取下载Live2D Cubism SDK for Unity使用文档限制unity导入并使用Live2D模型1、将SDK载入到项目2、载入模型3、显示模型4、 播放动画 表情动作修改参数眼神跟随看向鼠标效果部位触摸效果摸头效果摸头闭眼效果做成桌宠参考…

sicp每日一题[2.24-2.27]

2.24-2.26没什么代码量,所以跟 2.27 一起发吧。 Exercise 2.24 Suppose we evaluate the expression (list 1 (list 2 (list 3 4))). Give the result printed by the interpreter, the corresponding box-and-pointer structure, and the interpretation of this a…

奇瑞汽车—经纬恒润 供应链技术共创交流日 成功举办

2024年9月12日,奇瑞汽车—经纬恒润技术交流日在安徽省芜湖市奇瑞总部成功举办。此次盛会标志着经纬恒润与奇瑞汽车再次携手,深入探索汽车智能化新技术的前沿趋势,共同开启面向未来的价值服务与产品新篇章。 面对全球汽车智能化浪潮与产业变革…

EmguCV学习笔记 VB.Net 11.9 姿势识别 OpenPose

版权声明:本文为博主原创文章,转载请在显著位置标明本文出处以及作者网名,未经作者允许不得用于商业目的。 EmguCV是一个基于OpenCV的开源免费的跨平台计算机视觉库,它向C#和VB.NET开发者提供了OpenCV库的大部分功能。

教程VB.net版本请访问…

稀疏向量 milvus存储检索RAG使用案例

参考: https://milvus.io/docs/hybrid_search_with_milvus.md milvus使用不方便: 1)离线计算向量很慢BGEM3EmbeddingFunction 2)milvus安装环境支持很多问题,不支持windows、centos等 在线demo: https://co…

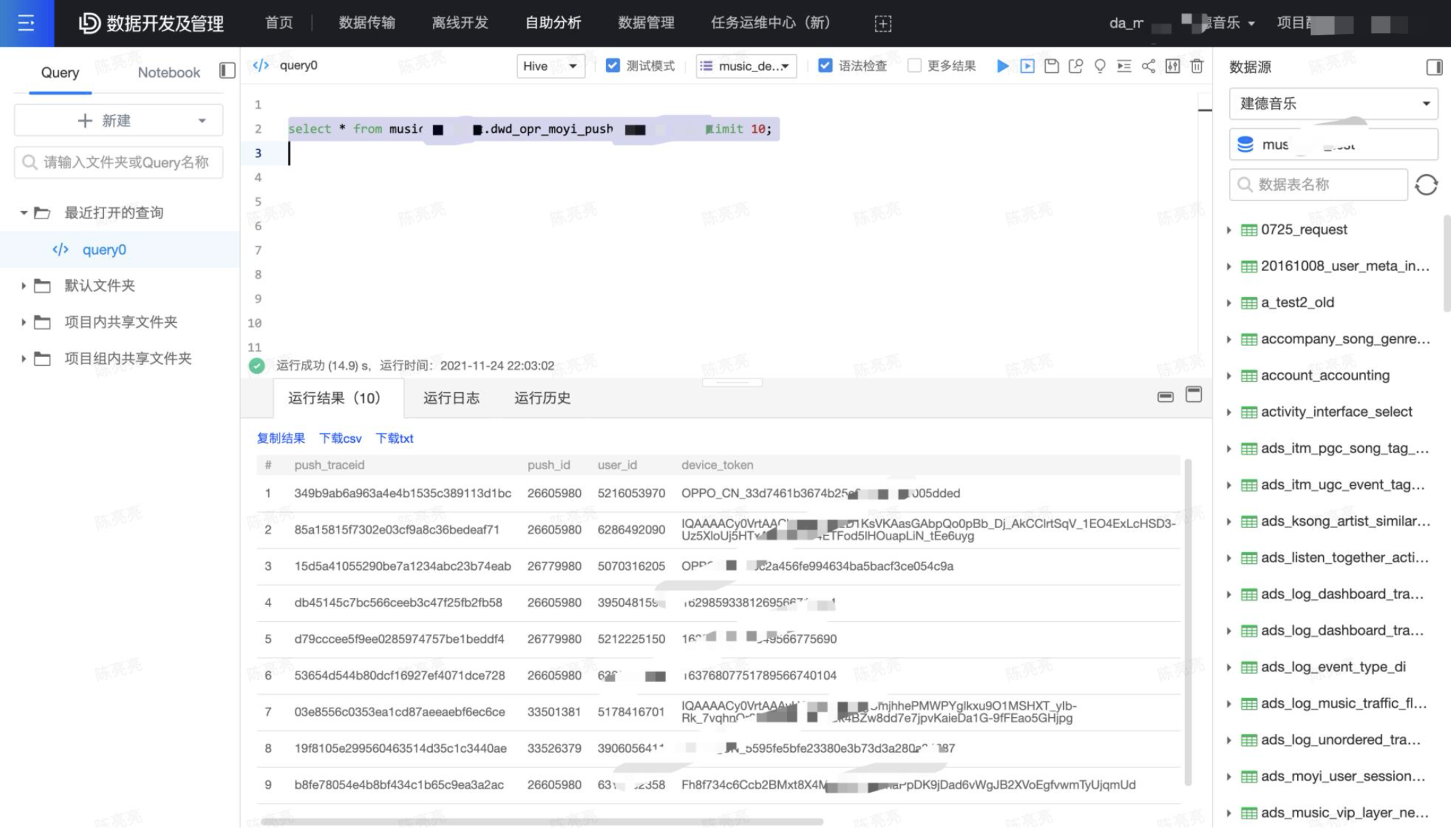

AI大模型助力数据消费,构建数据飞轮科学、高效的体系

随着互联网的技术高速发展,越来越多的应用层出不穷,伴随着数据应用的需求变多,为快速响应业务需求,很多企业在初期没有很好的规划的情况下,存在不同程度的烟囱式的开发模式,这样会导致企业不同业务线的数据…

【项目实战】如何在项目中基于 Spring Boot Starter 开发简单的 SDK

什么是SDK

通常在分布式项目中,类和方法是不能跨模块使用的。为了方便开发者的调用,我们需要开发一个简单易用的SDK,使开发者只需关注调用哪些接口、传递哪些参数,就像调用自己编写的代码一样简单。实际上,RPC(远程过…

![sicp每日一题[2.24-2.27]](https://i-blog.csdnimg.cn/direct/6f33a360fd7f47ebb6fd022c7cc0b395.png)