本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。如若转载,请注明出处:http://www.ldbm.cn/database/92978.html

如若内容造成侵权/违法违规/事实不符,请联系编程新知网进行投诉反馈email:809451989@qq.com,一经查实,立即删除!相关文章

【贪心算法第二弹——2208.将数组和减半的最小操作数】

1.题目解析 题目来源 2208.将数组和减半的最小操作数——力扣 测试用例 2.算法原理(贪心策略) 3.实战代码 class Solution {

public:int halveArray(vector<int>& nums) {priority_queue<double> hash;double sum 0.0;for(auto e : nums){hash.push(e);sum …

Transformer详解及衍生模型GPT|T5|LLaMa

简介

Transformer 是一种革命性的神经网络架构,首次出现在2017年的论文《Attention Is All You Need》中,由Google的研究团队提出。与传统的RNN和LSTM模型不同,Transformer完全依赖于自注意力(Self-Attention)机制来捕…

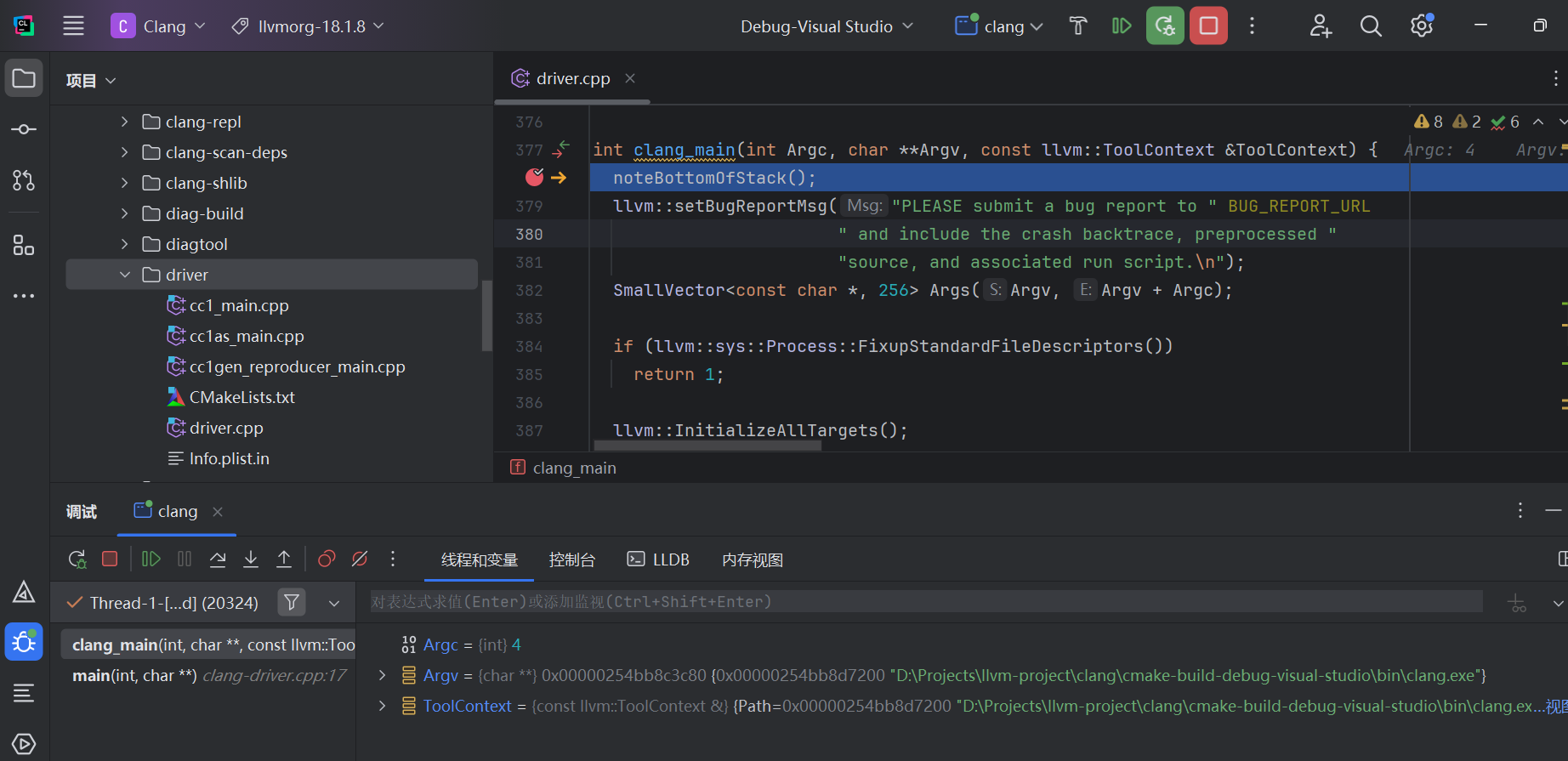

编译 LLVM 源码,使用 Clion 调试 clang

版权归作者所有,如有转发,请注明文章出处:https://cyrus-studio.github.io/blog/ 1. LLVM 简介

LLVM 是一个开源的编译器基础架构,最初由 Chris Lattner 于 2000 年在伊利诺伊大学开发,后来成为一个广泛应用于编译器和…

网络无人值守批量装机-cobbler

网络无人值守批量装机-cobbler

一、cobbler简介

上一节中的pxe+kickstart已经可以解决网络批量装机的问题了,但是环境配置过于复杂,而且仅针对某一个版本的操作系统进批量安装则无法满足目前复杂环境的部署需求。

本小节所讲的cobbler则是基于pxe+kickstart技术的二…

.NET9 - 新功能体验(三)

书接上回,我们继续来聊聊.NET9和C#13带来的新变化。 01、Linq新方法 CountBy 和 AggregateBy

引入了新的方法 CountBy 和 AggregateBy后,可以在不经过GroupBy 分配中间分组的情况下快速完成复杂的聚合操作,同时方法命名也非常直观࿰…

一加ACE 3 Pro手机无法连接电脑传输文件问题

先说结论:OnePlus手机无法连接电脑传输数据的原因,大概率是一加数据线的问题。尝试其他手机品牌的数据线(比如华为),再次尝试。

连接电脑方法:

1 打开开发者模式(非必要操作) 进入…

Parker派克防爆电机在实际应用中的安全性能如何保证?

Parker防爆电机确保在实际应用中的安全性能主要通过以下几个方面来保证:

1.防爆外壳设计:EX系列电机采用强大的防爆外壳,设计遵循严格的防爆标准,能够承受内部可能发生的爆炸而不破损,利用间隙切断原理,防…

【君正T31开发记录】8.了解rtsp协议及设计模式

前边搞定了驱动,先不着急直接上手撸应用层的代码,先了解一下大致要用到的东西。 设计PC端先用vlc rtsp暂时H264编码(vlc好像不支持h265,这个后边我试试)的视频流,先需要支持上rtsp server,了解rtsp协议是必…

MAC借助终端上传jar包到云服务器

前提:保证工程本地已打包完成:图中路径即为项目的target目录下已准备好的jar包 第一步:打开终端(先不要连接自己的服务器),输入下面的上传命令:

scp /path/to/local/app.jar username192.168.1…

探索Python自动化的奥秘:pexpect库的神奇之旅

文章目录 **探索Python自动化的奥秘:pexpect库的神奇之旅**一、背景:为何选择pexpect?二、pexpect是什么?三、如何安装pexpect?四、pexpect的五个简单函数五、pexpect在实际场景中的应用六、常见bug及解决方案七、总结…

4.SynchronousMethodHandler

前言

经过前面几篇文章的铺垫,这里终于到了正餐, 本篇我们将介绍SynchronousMethodHandler, 它作为同步请求的核心组件, 起到承上启下的功能

在介绍SynchronousMethodHandler, 之前我们先来看一下MethodHandler类

结构图 MethodHandler

它定义在InvocationHandl…

飞凌嵌入式旗下教育品牌ElfBoard与西安科技大学共建「科教融合基地」

近日,飞凌嵌入式与西安科技大学共同举办了“科教融合基地”签约揭牌仪式。此次合作旨在深化嵌入式创新人才的培育,加速科技成果的转化应用,标志着双方共同开启了一段校企合作的新篇章。

出席本次签约揭牌仪式的有飞凌嵌入式梁总、高总等一行…

【大语言模型】ACL2024论文-17 VIDEO-CSR:面向视觉-语言模型的复杂视频摘要创建

【大语言模型】ACL2024论文-17 VIDEO-CSR:面向视觉-语言模型的复杂视频摘要创建

VIDEO-CSR:面向视觉-语言模型的复杂视频摘要创建 目录 文章目录 【大语言模型】ACL2024论文-17 VIDEO-CSR:面向视觉-语言模型的复杂视频摘要创建目录摘要研究…

Linux线程(Linux和Windows的线程区别、Linux的线程函数、互斥、同步)

Linux线程(Linux和Windows的线程区别、Linux的线程函数、互斥、同步)

1. 线程介绍

线程的概念:

线程是 CPU 调度的基本单位。它被包含在进程之中,是进程中的实际运作单位。一条线程指的是进程中一个单一顺序的控制流࿰…

函数类型注释和Union联合类型注释

函数类型注释格式(调用时提示输入参数的类型):

)def 函数名(形参名:类型,形参名:类型)->函数返回值类型: 函数体 Union联合类型注释(可注释多种类型混合的变量)格式:

#先导入模块

from typing import…

微软正在测试 Windows 11 对第三方密钥的支持

微软目前正在测试 WebAuthn API 更新,该更新增加了对使用第三方密钥提供商进行 Windows 11 无密码身份验证的支持。

密钥使用生物特征认证,例如指纹和面部识别,提供比传统密码更安全、更方便的替代方案,从而显著降低数据泄露风险…

论文模型设置与实验数据:scBERT

Yang, F., Wang, W., Wang, F. et al. scBERT as a large-scale pretrained deep language model for cell type annotation of single-cell RNA-seq data. Nat Mach Intell 4, 852–866 (2022). https://doi.org/10.1038/s42256-022-00534-z

论文地址:scBERT as a…

多维高斯分布的信息熵和KL散度计算

多维高斯分布是一种特殊的多维随机分布,应用非常广泛,很多现实问题的原始特征分布都可以看作多维高斯分布。本文以数据特征服从多维高斯分布的多分类任务这一理想场景为例,从理论层面分析数据特征和分类问题难度的关系注意,本文分…

AWS codebuild + jenkins + github 实践CI/CD

前文

本文使用 Jenkins 结合 CodeBuild, CodeDeploy 实现 Serverless 的 CI/CD 工作流,用于自动化发布已经部署 lambda 函数。 在 AWS 海外区,CI/CD 工作流可以用 codepipeline 这项产品来方便的实现,

CICD 基本概念

持续集成( Continuous…

Android Studio更改项目使用的JDK

一、吐槽

过去,在安卓项目中配置JDK和Gradle的过程非常直观,只需要进入Android Studio的File菜单中的Project Structure即可进行设置,十分方便。

原本可以在这修改JDK:

但大家都知道,Android Studio的狗屎性能,再加…