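This article was contributed by an internet user. The views expressed are the author's own and do not represent the position of this site. This site only provides information storage services, does not own the copyright, and assumes no related legal liability. If you republish it, please credit the source: http://www.ldbm.cn/p/283326.html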

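If the content infringes any rights, violates laws or regulations, or is factually inaccurate, please contact 编程新知网 to file a complaint at email: 809451989@qq.com. Once verified, it will be deleted immediately!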

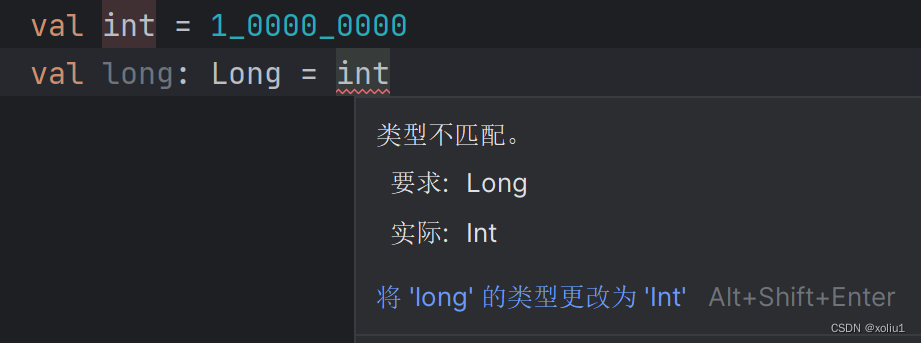

【Kotlin】基础变量、集合和安全操作符

文章目录 数字字面常量显式转换数值类型转换背后 位运算符字符串字符串模板修饰符数组集合(Kotlin自带)通过序列提高效率惰性求值序列的操作方式中间操作末端操作 可null类型安全调用操作符 ?.操作符 ?:非空断言操作符 !! 使用类型检测及自动类型转换安…

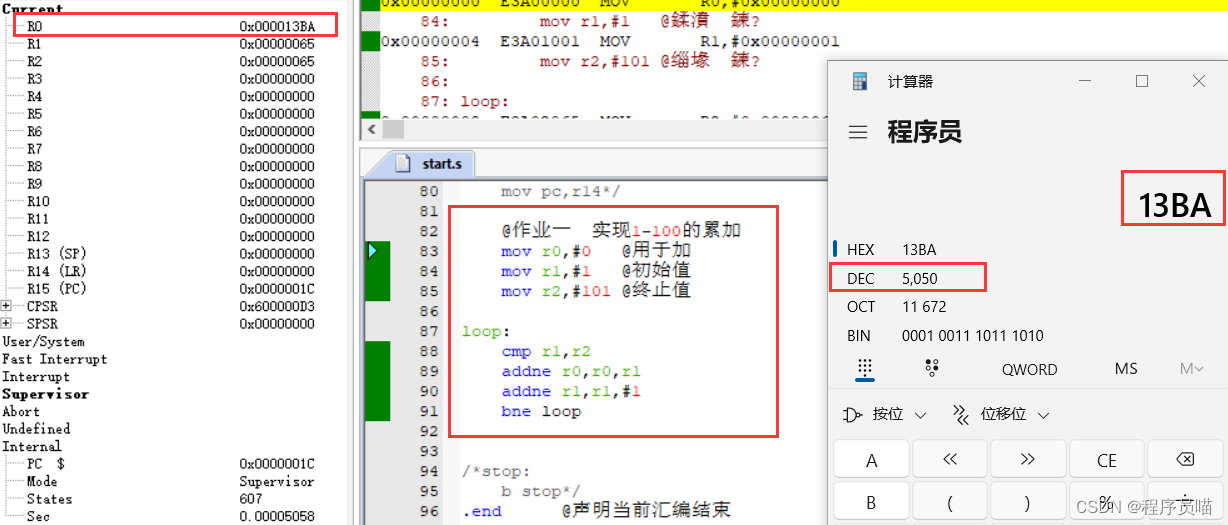

华清远见嵌入式学习——ARM——作业1

要求: 代码: mov r0,#0 用于加mov r1,#1 初始值mov r2,#101 终止值loop: cmp r1,r2addne r0,r0,r1addne r1,r1,#1bne loop

效果:

关于pycharm无法进入base界面的问题

问题:terminal输入activate无法进入base 解决方案 1.Cortana这边找到Anaconda Prompt右击进入文件所在位置 2. 右击进入属性 3. 复制cmd.exe开始到最后的路径 cmd.exe "/K" C:\ProgramData\anaconda3\Scripts\activate.bat C:\ProgramData\anaconda3 …

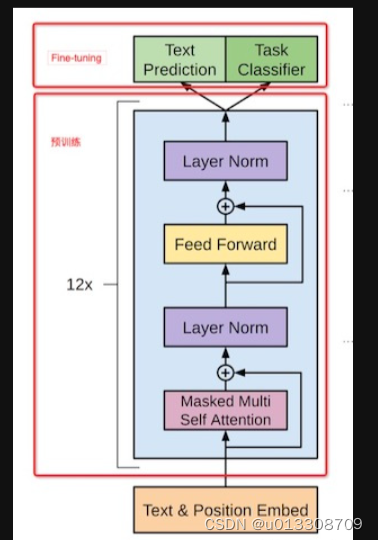

自然语言处理阅读第一弹

Transformer架构

encoder和decoder区别

Embeddings from Language Model (ELMO)

一种基于上下文的预训练模型,用于生成具有语境的词向量。原理讲解ELMO中的几个问题

Bidirectional Encoder Representations from Transformers (BERT)

BERT就是原生transformer中的Encoder两…

Java设计模式之七大设计原则

七大设计原则

设计原则概述 单一职责原则

定义

一个类仅有一个引起它变化的原因

分析 模拟场景

访客用户 普通用户 VIP用户

代码实现

/*** 视频用户接口*/

public interface IVideoUserService {void definition();void advertisement();

}/*** 访客用户*/

public class…

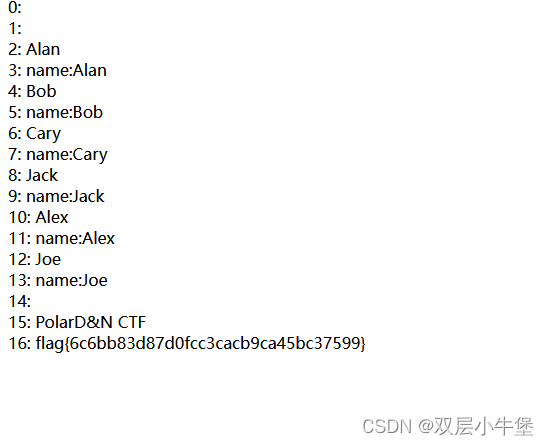

社交网络分析3:社交网络隐私攻击、保护的基本概念和方法 + 去匿名化技术 + 推理攻击技术 + k-匿名 + 基于聚类的隐私保护算法

社交网络分析3:社交网络隐私攻击、保护的基本概念和方法 去匿名化技术 推理攻击技术 k-匿名 基于聚类的隐私保护算法 写在最前面社交网络隐私泄露用户数据暴露的途径复杂行为的隐私风险技术发展带来的隐私挑战经济利益与数据售卖防范措施 社交网络 用户数据隐私…

Android用户目前面临的六大安全威胁

如今,各种出色的Android设备已能让我们无缝地利用生活中的碎片时间,开展各类工作、娱乐、创作、以及交流等活动。不过,目前随着越来越多的安全威胁在我们没注意到或看不见的角落里暗流涌动,时常会危及我们的数据、隐私、甚至是And…

2024年G2电站锅炉司炉证考试题库及G2电站锅炉司炉试题解析

题库来源:安全生产模拟考试一点通公众号小程序

2024年G2电站锅炉司炉证考试题库及G2电站锅炉司炉试题解析是安全生产模拟考试一点通结合(安监局)特种作业人员操作证考试大纲和(质检局)特种设备作业人员上岗证考试大纲…

HTML---CSS美化网页元素

文章目录 前言一、pandas是什么?二、使用步骤 1.引入库2.读入数据总结 一.div 标签: <div>是HTML中的一个常用标签,用于定义HTML文档中的一个区块(或一个容器)。它可以包含其他HTML元素,如文本、图像…

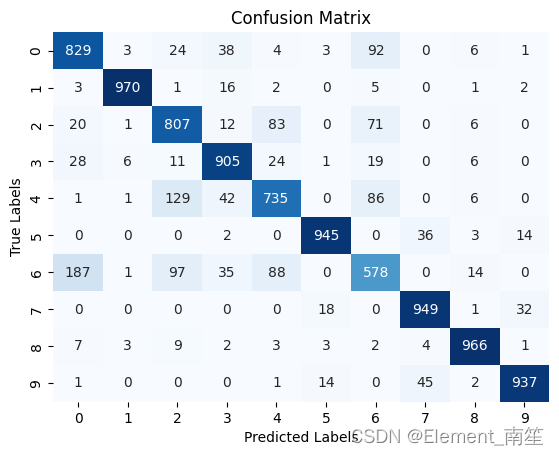

深度学习笔记_7经典网络模型LSTM解决FashionMNIST分类问题

1、 调用模型库,定义参数,做数据预处理

import numpy as np

import torch

from torchvision.datasets import FashionMNIST

import torchvision.transforms as transforms

from torch.utils.data import DataLoader

import torch.nn.functional as F

im…

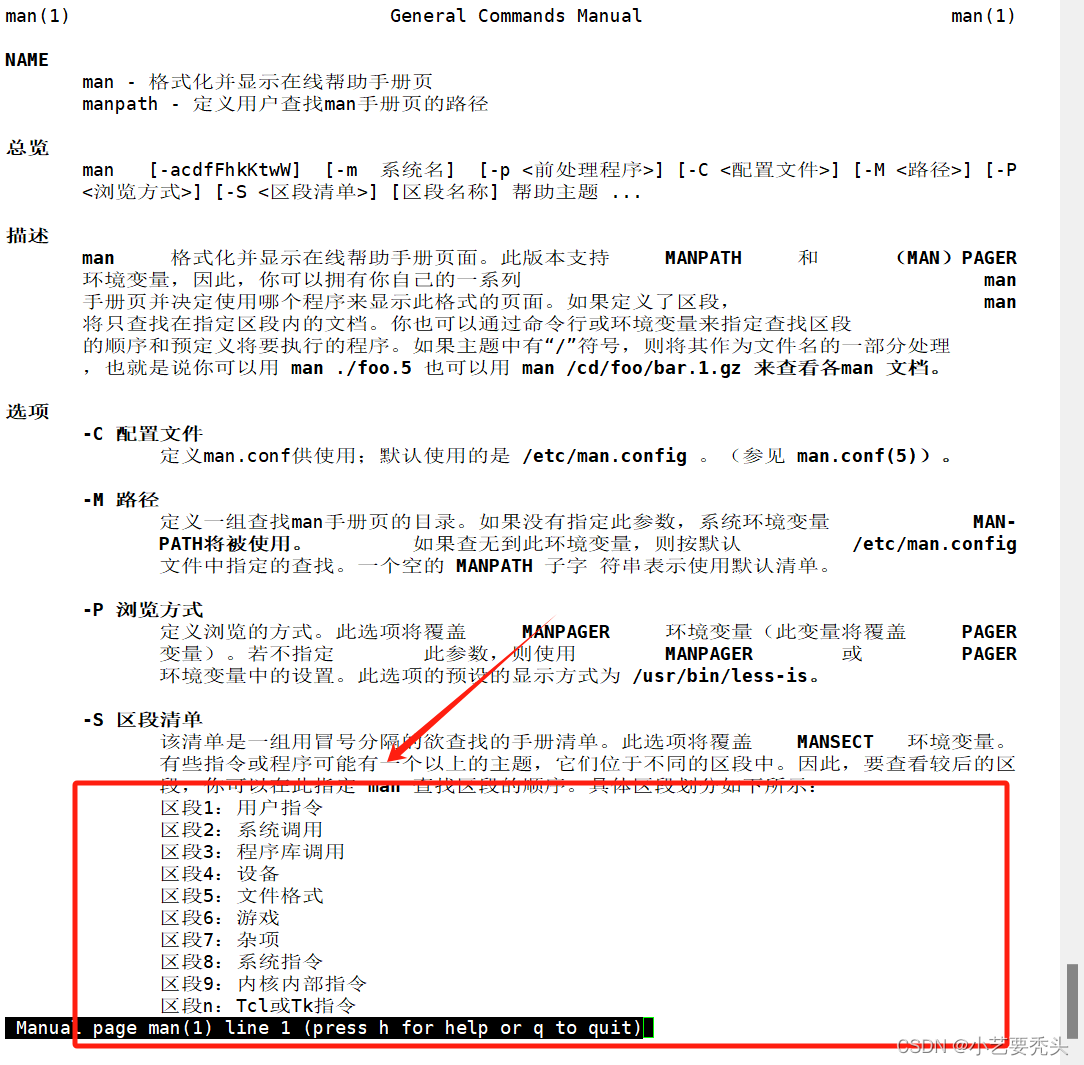

Linux学习(1)——初识Linux

目录

一、Linux的哲学思想

二、Shell

1、Shell的定义

2、Shell的作用 三、Linux命令行

1、Linux通用命令行使用格式 四、Linux命令的分类

1、内部命令和外部命令的理解

2、内部命令和外部命令的区别

3、命令的执行过程

五、编辑Linux命令行

六、获得命令帮助的方法

…

13个数据可视化工具 一键生成可视化图表

前言

数字经济时代,我们每天正在处理海量数据,对数据可视化软件的需求变得突出,它可以帮助人们通过模式、趋势、仪表板、图表等视觉辅助工具理解数据的重要性。

如果遇到数据集需要分析处理,但是你不又知道选择何种数据可视化工…

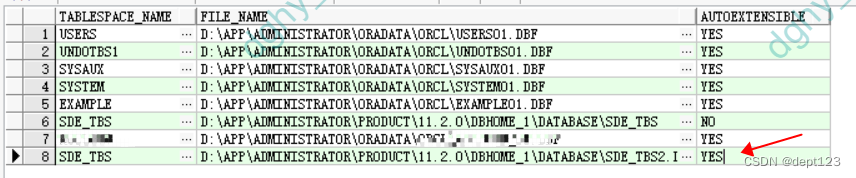

arcmap + oracle11g 迁移数据 报错 copyFeatures失败

原因排查:

1.通过这个界面,我们无法查到真正的原因,

2.将数据拷贝到我们自己的arcmap服务器中,采用 单个要素 导入,从result面板中查找原因; 从上面这个图中,看到关键信息 DBMS error ORA-016…

高精度红蜡3D打印加工服务珠宝首饰3D打印微型医疗器械3D打印-CASAIM

随着科技的飞速发展,3D打印技术已经逐渐渗透到各个领域,成为现代制造业的重要组成部分。而在众多的3D打印材料中,高精度红蜡作为一种具有优异性能的材料,适合对精度要求高的小尺寸模型,用于快速铸造,如珠宝…

Pytorch深度强化学习案例:基于Q-Learning的机器人走迷宫

目录 0 专栏介绍1 Q-Learning算法原理2 强化学习基本框架3 机器人走迷宫算法3.1 迷宫环境3.2 状态、动作和奖励3.3 Q-Learning算法实现3.4 完成训练 4 算法分析4.1 Q-Table4.2 奖励曲线 0 专栏介绍

本专栏重点介绍强化学习技术的数学原理,并且采用Pytorch框架对常见…

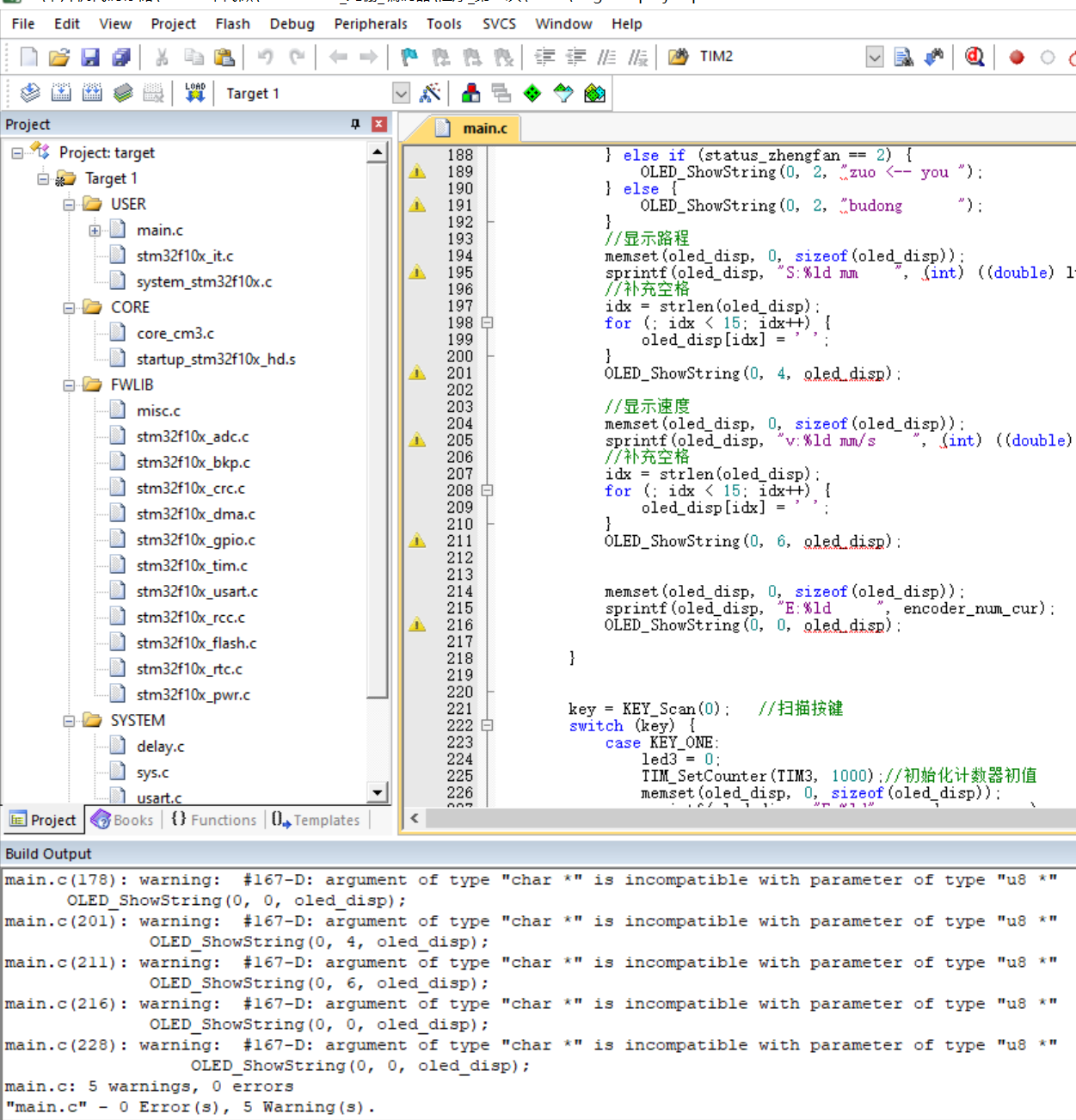

【程序】STM32 读取光栅_编码器_光栅传感器_7针OLED

文章目录 源代码工程编码器基础程序参考资料 源代码工程

源代码工程打开获取:

http://dt2.8tupian.net/2/28880a55b6666.pg3这里做了四倍细分,在屏幕上显示 速度、路程、方向。 接线方法:

单片机--------------串口模块 单片机的5V-------…

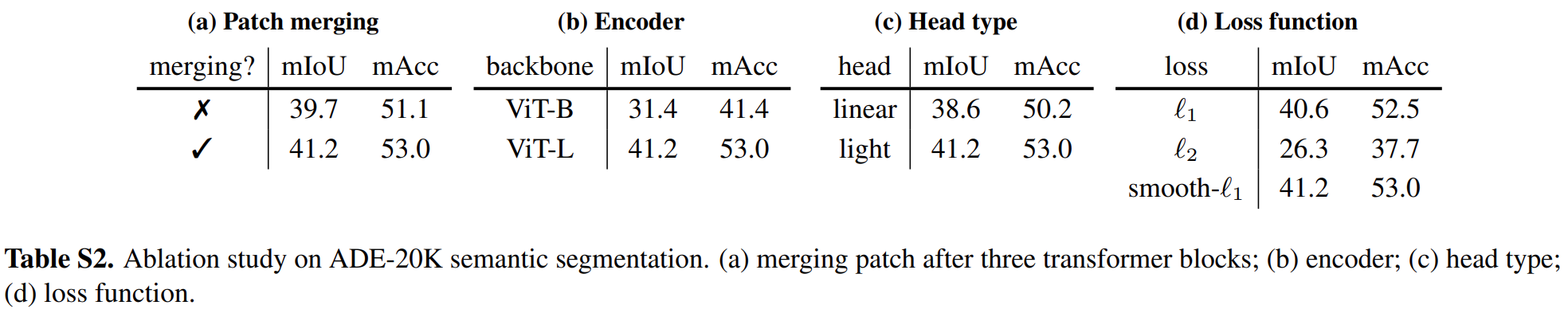

论文阅读——Painter

Images Speak in Images: A Generalist Painter for In-Context Visual Learning

GitHub - baaivision/Painter: Painter & SegGPT Series: Vision Foundation Models from BAAI

可以做什么: 输入和输出都是图片,并且不同人物输出的图片格式相同&a…