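This article was contributed by an internet user. The views expressed are the author's own and do not represent the position of this site. This site only provides information storage space; it does not own the copyright and assumes no related legal liability. If you repost it, please credit the source: http://www.ldbm.cn/p/402492.html

If any content is infringing, illegal, or factually incorrect, please contact 编程新知网 to file a complaint by email: 809451989@qq.com. Once verified, it will be removed immediately!

Related articles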

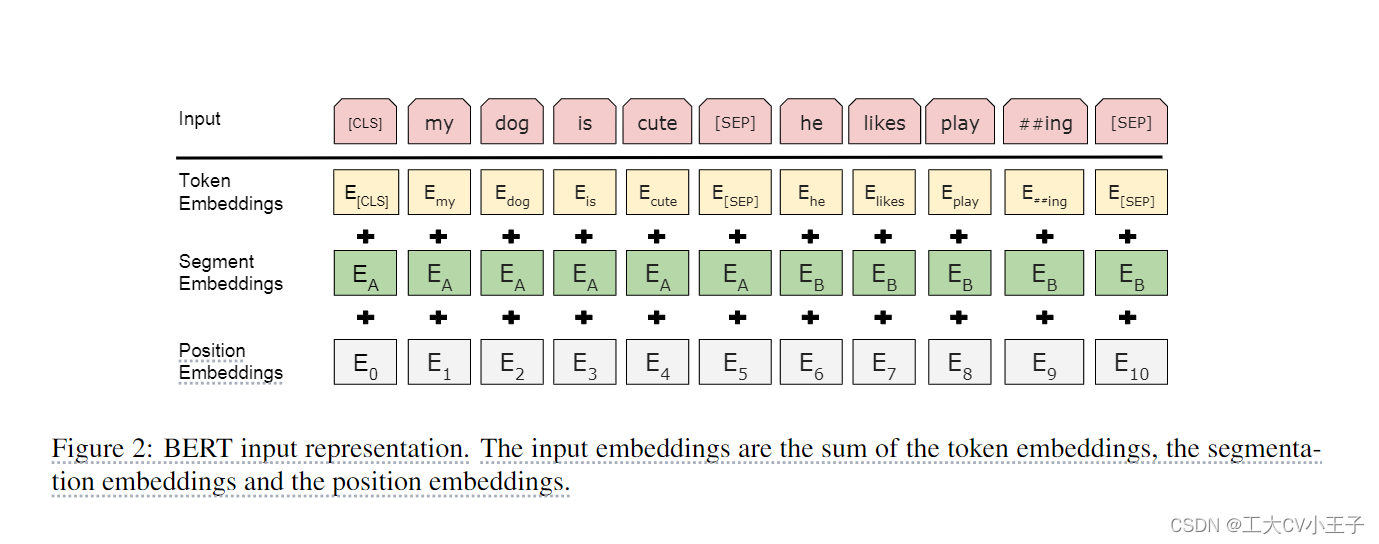

Notes on the "BERT" Paper

Original paper:

[1810.04805] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding (arxiv.org)

Notes:

What:

BERT: Pre-training of Deep Bidirectional Transformers for Language Understand…

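How Data Can Be Supplied, Flow Freely, Be Used Well, and Stay Secure

As is well known, data has been listed as a basic factor of production, and the National Data Administration has been established to coordinate this work. How data as a factor of production can realize its value has set off wave after wave of enthusiasm nationwide. With the arrival of large language models from abroad, domestic applications of large language models have also flourished, while at the same time…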

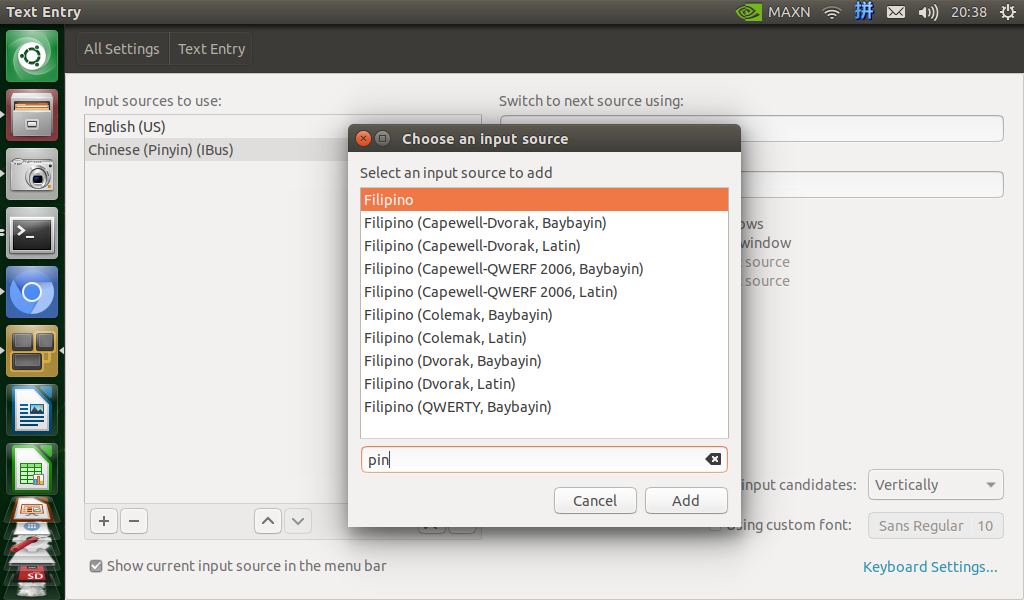

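Python3 Ubuntu

I. Install a Chinese input method

1. sudo apt install ibus-sunpinyin

2. Click the input method indicator in the top-right corner, click the plus sign, type "yin" to add it, and finally select that input method.

II. Install a screenshot tool

1. sudo apt install gnome-screenshot

III. Install opencv-python

1. pip3 install --upgrade…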

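Fixing Slow MySQL Driver Downloads in IDEA

Download the driver

From the download page, extract the archive and take out the **.jar** file, then put it in a directory of your choosing. Open the IDEA project and click the Database tab on the right. On the page that opens, click the + button and select Data Source -> MySQL in turn. On the page that pops up, select in turn…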

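C++ from Beginner to Expert: Class Definitions, Class Access Specifiers, and Encapsulation

Class Definitions, Class Access Specifiers, and Encapsulation
Preface
I. Class definitions: two ways to define a class; suggested naming rules for member variables; examples
II. Class access specifiers and encapsulation: access specifiers; notes on access specifiers; why C++ introduced access specifiers; exercise; encapsulation; exercise
Preface

Defining classes is a basic concept of object-oriented programming: a class describes a category of objects that share the same attributes and methods…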

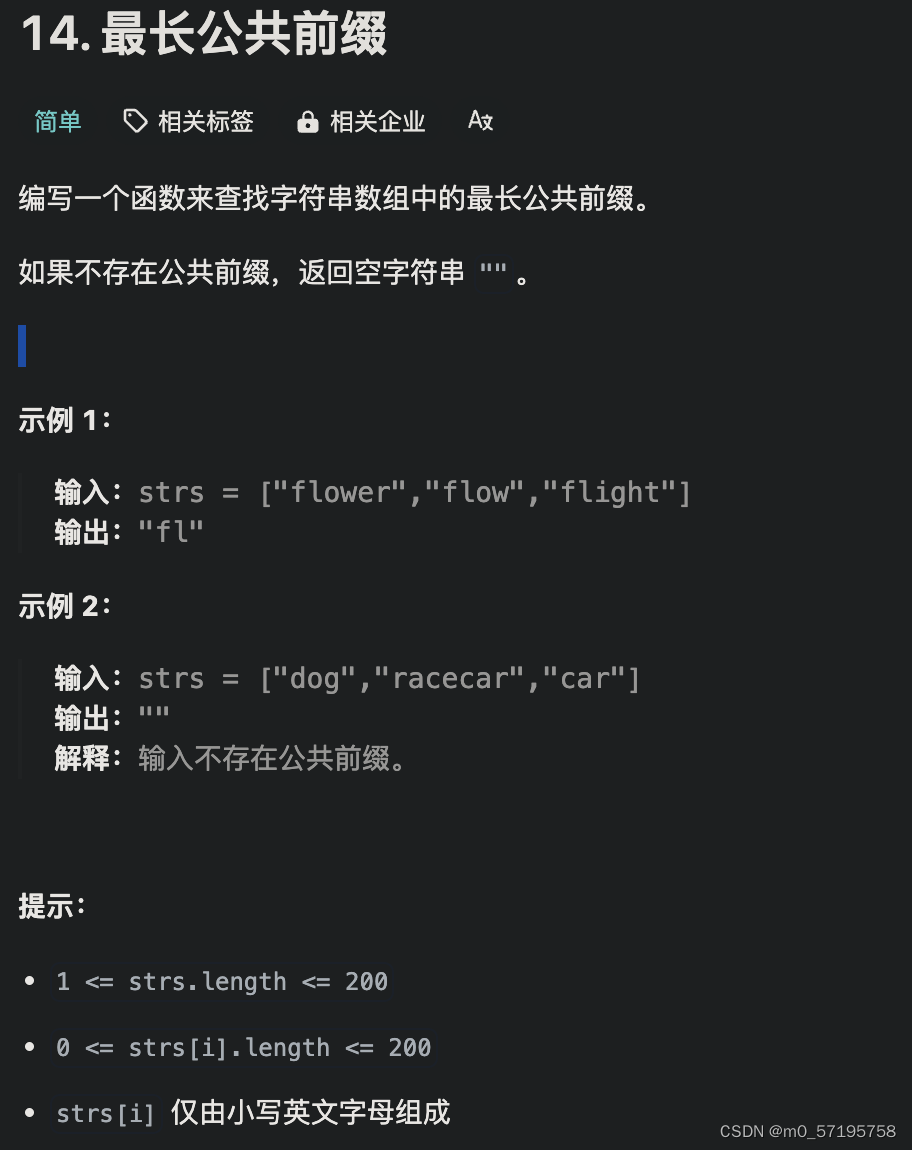

Java | LeetCode Java Solutions: Problem 14, Longest Common Prefix

Problem:  Solution:

class Solution {
    public String longestCommonPrefix(String[] strs) {
        if (strs == null || strs.length == 0) {
            return "";
        }
        int minLength = Integer.MAX_VALUE;
        for (String str : strs) {
            minLength = Math.min(minLength, str.length…
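The Java excerpt is cut off right after computing `minLength`, so how the original solution continues is unknown. As an assumption, one common way to use that bound is a binary search on the prefix length: a prefix of length `k` is common only if every shorter prefix is too, so the predicate is monotonic. The sketch below is in Python rather than Java, and the names `longest_common_prefix` and `is_common_prefix` are hypothetical.

```python
def longest_common_prefix(strs):
    """Binary search on the prefix length (assumed approach, not the original code)."""
    if not strs:
        return ""
    # The common prefix can never be longer than the shortest string.
    min_length = min(len(s) for s in strs)
    low, high = 0, min_length
    while low < high:
        # Round the midpoint up so that `low = mid` always makes progress.
        mid = (high - low + 1) // 2 + low
        if is_common_prefix(strs, mid):
            low = mid
        else:
            high = mid - 1
    return strs[0][:low]


def is_common_prefix(strs, length):
    """Return True if the first `length` characters are shared by every string."""
    prefix = strs[0][:length]
    return all(s[:length] == prefix for s in strs[1:])
```

For example, `longest_common_prefix(["flower", "flow", "flight"])` returns `"fl"`. Each check costs O(m·n) character comparisons and the search runs O(log m) checks, where m is the shortest length and n the number of strings.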

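Building a Solid Smart New-Energy Data Foundation: TiDB Serverless in Practice at Sandisolar+

This article describes the approach and practice SandiSolar followed to build its smart new-energy data foundation with TiDB Serverless. As a new-energy company dedicated to providing clean power solutions worldwide, SandiSolar faced the challenge of processing large volumes of real-time data. To address this, SandiSolar chose TiDB Serverless as its data…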

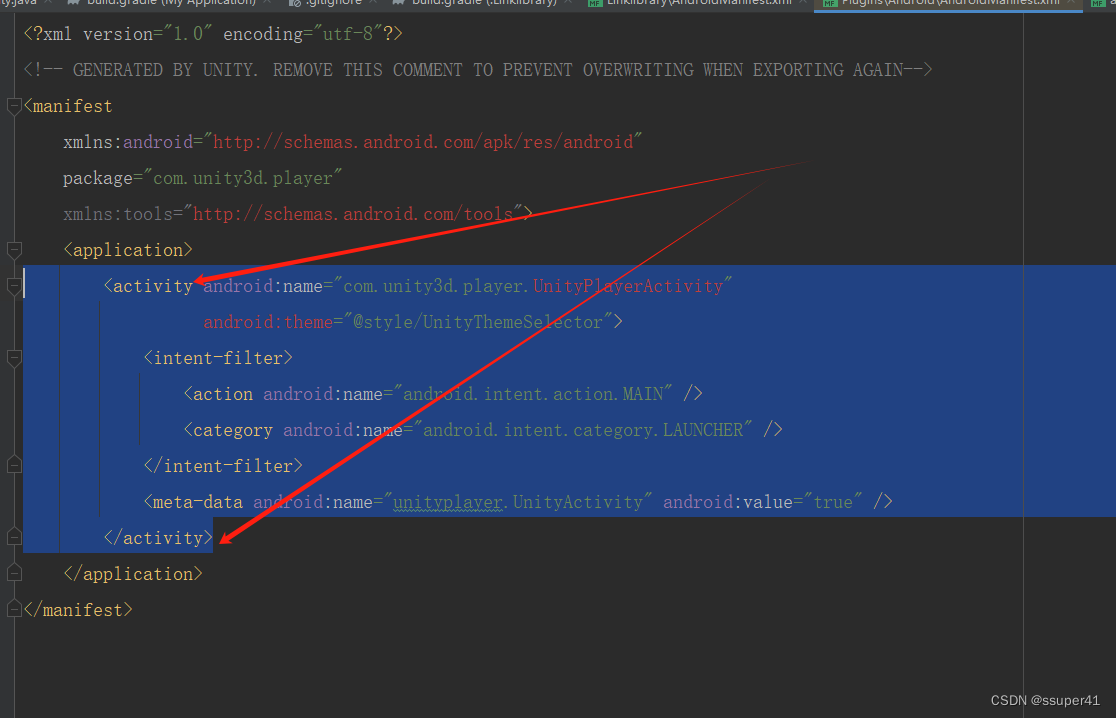

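Interaction Between Unity and Android

Interaction Between Unity and Android
I. Preface
II. Exporting a jar/aar package from Android to Unity
2.1 Version notes
2.2 Copying Unity's classes.jar into the Android project
2.2.1 Location of classes.jar
2.2.2 Creating a module in Android Studio
2.2.3 Copying classes.jar into the Android project and enabling it
2.3 Writing the Android project code
2.3.1 Creating MainActivity
2.…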

Reading Notes on the Paper "Structural Information Enhanced Graph Representation for Link Prediction"

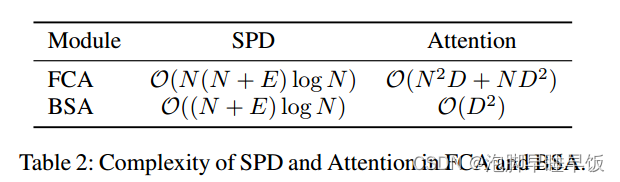

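Reading Notes on the Paper "Structural Information Enhanced Graph Representation for Link Prediction"  Paper overview  Introduction  Problem 1: **Existing node labeling tricks only help perceive path depth, but lack awareness of path "width", such as node degree or the number of paths**. Problem 2: G…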

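A Brief Introduction to C++ Templates and Their Use

Contents

Preface:

1. Generic programming

2. Function templates

3. Class templates

Why do we need class templates? Isn't typedef enough?

Class templates can only be instantiated explicitly:

Note the difference between the class name and the type:

Note: it is best not to separate a class template's declaration from its definition:

Summary:  Preface…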

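Five Java Interview Questions a Day: ZooKeeper (Part 3)

Contents:
Question 1. Session management
Question 2. Server roles
Question 3. Server working states in ZooKeeper
Question 4. Data synchronization
Question 5. How does ZooKeeper guarantee sequential consistency of transactions?

Question 1. Session management

Bucketing strategy: sessions that expire at around the same time are placed in the same bucket and managed together, so that Zoo…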
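To make the bucketing idea concrete: ZooKeeper is commonly described as rounding each session's expiry time up to the next multiple of an expiration interval, i.e. `expiry = ((now + timeout) // interval + 1) * interval`, so all sessions landing in the same interval share one bucket and can be expired together in a single pass. The Python sketch below illustrates only that idea; the class `SessionBuckets` and its methods are hypothetical and are not ZooKeeper's actual code or API.

```python
from collections import defaultdict


class SessionBuckets:
    """Hypothetical illustration of bucketed session expiry (not ZooKeeper's code)."""

    def __init__(self, expiration_interval_ms):
        self.interval = expiration_interval_ms
        self.buckets = defaultdict(set)  # expiry time -> set of session ids
        self.expiry_of = {}              # session id -> its current expiry time

    def round_to_next_interval(self, t):
        # Always moves to the *next* interval boundary, even when t is already on one.
        return (t // self.interval + 1) * self.interval

    def touch(self, session_id, now_ms, timeout_ms):
        """Create or renew a session, moving it to the bucket for its new expiry time."""
        new_expiry = self.round_to_next_interval(now_ms + timeout_ms)
        old = self.expiry_of.get(session_id)
        if old is not None:
            self.buckets[old].discard(session_id)
            if not self.buckets[old]:
                del self.buckets[old]
        self.buckets[new_expiry].add(session_id)
        self.expiry_of[session_id] = new_expiry
        return new_expiry

    def expire(self, now_ms):
        """Remove and return every session whose bucket time is <= now_ms."""
        expired = set()
        for t in sorted(t for t in self.buckets if t <= now_ms):
            for sid in self.buckets.pop(t):
                expired.add(sid)
                del self.expiry_of[sid]
        return expired
```

With an interval of 2000 ms, a 5000 ms session touched at 1000 ms and another touched at 1500 ms both land in the 8000 ms bucket, so one check at 8000 ms expires both. Renewing a session simply moves it to a later bucket.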

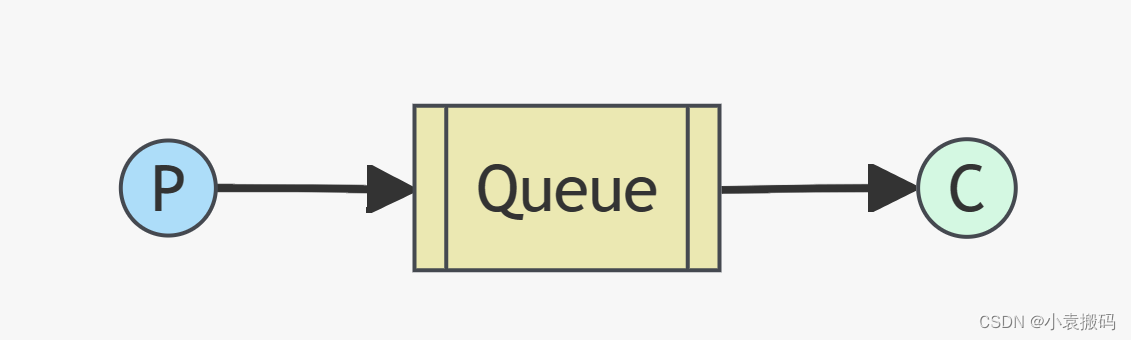

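Integrating RabbitMQ with Spring Boot 3, Part 2: A Simple Queue Model Example

Integrating RabbitMQ with Spring Boot 3, Part 2: A Simple Queue Model Example
Contents
1. Simple queue model
  1. Message publisher
    1. Create the configuration class for the simple queue
    2. Controller for publishing messages
  2. Message consumer
  3. Output

1. Simple queue model: the simple queue model publishes messages point to point, with…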
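As an in-process analogue of the point-to-point model (one publisher, one queue, one consumer), the sketch below uses Python's standard `queue.Queue` and a consumer thread. It does not use RabbitMQ or Spring AMQP at all; `run_simple_queue` is a hypothetical helper, and a `None` sentinel is assumed to signal "no more messages", so `None` itself cannot be sent as a message.

```python
import queue
import threading


def run_simple_queue(messages):
    """Publish messages to one queue and let a single consumer receive them in order."""
    q = queue.Queue()
    received = []
    sentinel = None  # assumption: None marks the end of the stream

    def consumer():
        while True:
            msg = q.get()          # blocks until a message is available
            if msg is sentinel:
                break
            received.append(msg)   # each message is consumed exactly once

    worker = threading.Thread(target=consumer)
    worker.start()
    for m in messages:             # the publisher side
        q.put(m)
    q.put(sentinel)
    worker.join()
    return received
```

Calling `run_simple_queue(["hello", "world"])` returns the messages in the order they were published, which mirrors the FIFO, single-consumer delivery of a simple queue.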

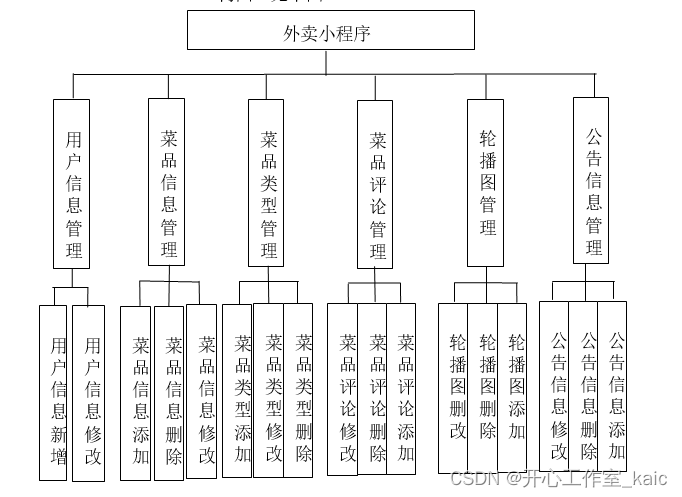

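Design and Implementation of a Food Delivery Management System Based on WeChat Mini Programs (Thesis + Source Code)_kaic

Abstract: The Internet has developed to the point where both its theory and its technology are mature, and it is widely involved in every aspect of society. It lets information spread over the network and, combined with information management tools, can serve people well. To address the disorganized management of faculty research output in universities, high error rates, information security…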

Merging Multiple Video Clips into One with ffmpeg

Merging multiple video clips into one with ffmpeg  References: "The 6-minute curse of online videos". Create a new text file, filelist.txt:

filelist.txt:

file output_train_video_0.mp4
file output_train_video_1.mp4
file output_train_video_2.mp4
file output_train_video_3.mp4
file output_train_video_4.m…
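A list like filelist.txt is read by ffmpeg's concat demuxer. The Python sketch below builds the list text and the command line; the helper names `build_filelist` and `concat_command` are hypothetical. Each name is wrapped in single quotes, with an embedded quote written as `'\''`. The flags `-f concat -safe 0 -i … -c copy` are standard ffmpeg options: `-safe 0` permits arbitrary paths in the list, and `-c copy` concatenates without re-encoding, which assumes every clip shares the same codecs and stream parameters.

```python
def build_filelist(paths):
    """Return concat-demuxer list text: one `file '<path>'` line per clip."""
    lines = []
    for p in paths:
        # Close the quote, emit an escaped quote, then reopen: ' -> '\''
        escaped = str(p).replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def concat_command(list_path="filelist.txt", output="output.mp4"):
    """Argument list for merging the listed clips by stream copy."""
    return ["ffmpeg", "-f", "concat", "-safe", "0",
            "-i", list_path, "-c", "copy", output]
```

Usage: write the list with `Path("filelist.txt").write_text(build_filelist(names), encoding="utf-8")` (from `pathlib`), then run `subprocess.run(concat_command(), check=True)`.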

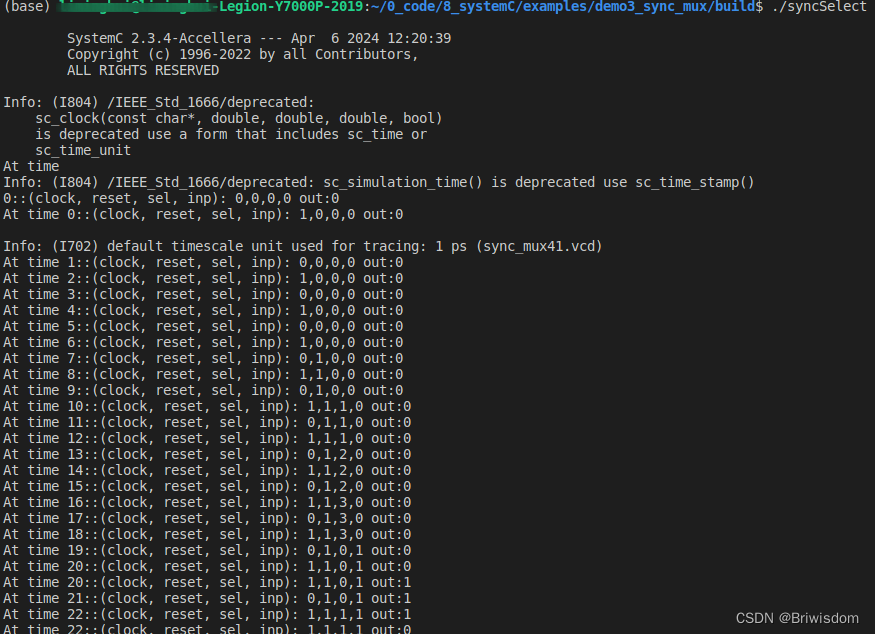

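Getting Started with SystemC: A Complete Testbench Example, a Multiplexer with Synchronous Output

Content: a simple testbench example from an introductory SystemC book.

Writing the module file

A 4-to-1 multiplexer model with latched output. The output is latched on the positive (rising) edge of the clock signal.

sync_mux41.h file

#include <systemc.h>

SC_MODULE(sync_mux41)
{
    sc_in<bool> clock, reset;
    sc_in<sc_uint<…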
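The key behavior of this module is that the selected input only reaches the output on a rising clock edge and is held between edges. The Python sketch below models that behavior cycle by cycle; it is not SystemC, and `SyncMux41.step` is a hypothetical interface. Because the header above is truncated before the data and select ports, it assumes four integer inputs, a 2-bit select, and a synchronous reset that clears the output to 0 on a clock edge.

```python
class SyncMux41:
    """Hypothetical behavioral model of a 4-to-1 mux with an edge-latched output."""

    def __init__(self):
        self.prev_clock = False
        self.out = 0

    def step(self, clock, reset, inputs, sel):
        """Apply one sample of the signals and return the (possibly latched) output."""
        rising = clock and not self.prev_clock  # detect the positive edge
        self.prev_clock = clock
        if rising:
            # Assumed synchronous reset: only takes effect on a rising edge.
            self.out = 0 if reset else inputs[sel]
        return self.out  # unchanged between edges, like a register
```

Changing `sel` between edges has no effect until the next rising edge, which is exactly what distinguishes this from a purely combinational multiplexer.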

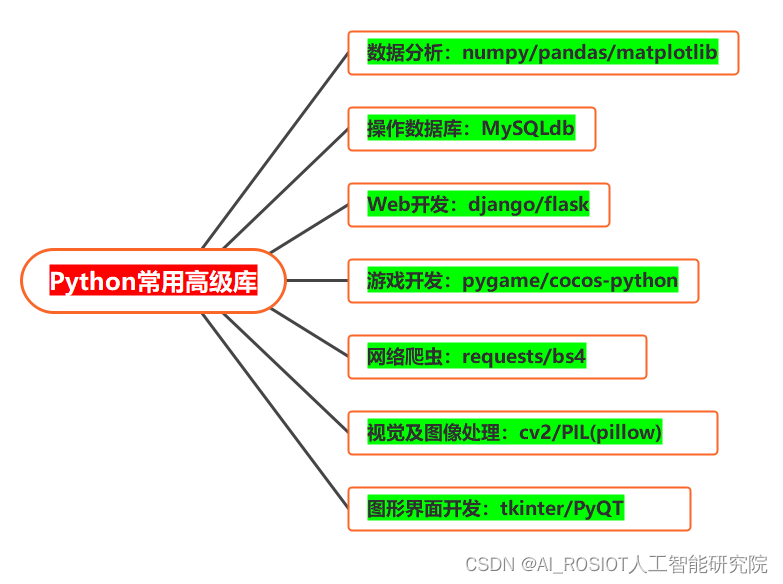

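90 Days to Master Python - 02 - Fundamentals: Getting to Know Python and PyCharm

90 Days to Master Python: Series Index

90 Days to Master Python - 01 - Fundamentals: The Most Complete Summary of the Python Standard Library on CSDN

90 Days to Master Python - 02 - Fundamentals: Getting to Know Python and PyCharm

90 Days to Master Python - 03 - Fundamentals: Python and PyCharm (Language Features, Learning Methods, Tool Installation)

90 Days to Master Python - 04 - Fundamentals: Pytho…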

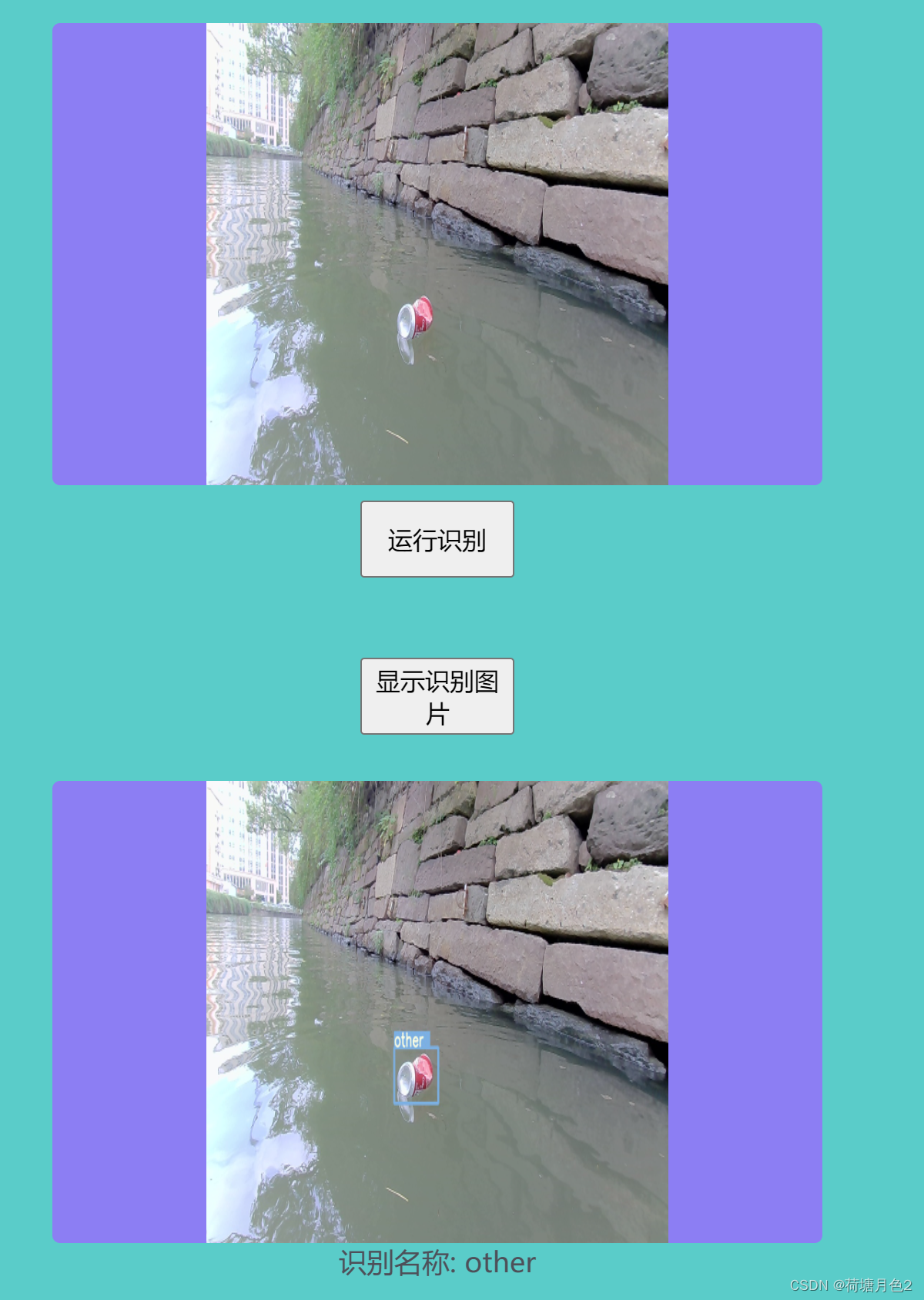

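Python+Django+Html River Garbage Recognition Web System

Selected program examples: Python+Django+Html River Garbage Recognition Web System. If you need help installing the runtime environment or with remote debugging, see the personal QQ card at the bottom of the article; professional technicians can assist remotely!  Preface

This blog post provides code for the "Python+Django+Html River Garbage Recognition Web System". The code is clean and well…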

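Introduction to the HTML5 Canvas

1. Canvas.getContext

getContext("2d") is a method of the Canvas element that returns a rendering context object for drawing 2D graphics. In the given code, the Canvas element with id "myCanvas" is first obtained with getElementById, and then getContext("2d") is called to obtain that Ca…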

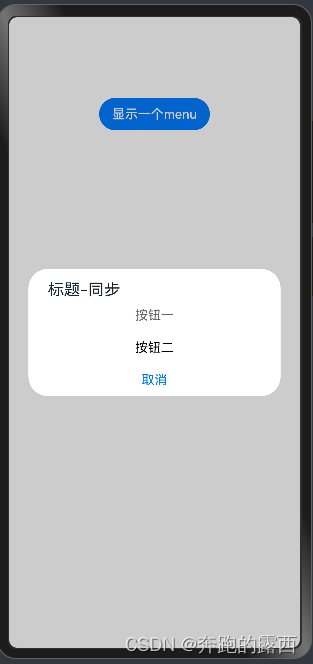

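[HarmonyOS] @ohos.promptAction (Pop-up Dialogs)

I. Background

Creates and displays text toasts, dialog boxes, and action menus.

Documentation link 👉: Documentation Center. Note: the initial APIs of this module are supported since API version 9. APIs added in later versions are marked with a superscript indicating the version in which they were introduced. This module cannot be used at the file declaration level of a UIAbility, that is…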

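Python Standard Data Types: Common Set Methods

In Python, a set is an unordered collection of unique elements. Python sets mirror the mathematical concept of a set and support a range of commonly used methods. This post takes an in-depth look at the common methods of Python sets, helping…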
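A short sketch of the most common set methods, using only built-in `set` operations; the variable names and values are illustrative. Note the difference between `remove` (raises `KeyError` for a missing element) and `discard` (silently does nothing), and that each operator shown has an equivalent named method.

```python
fruits = {"apple", "banana"}
fruits.add("cherry")                 # add a single element
fruits.update(["banana", "durian"])  # add many; the duplicate "banana" is ignored
fruits.discard("grape")              # missing element: no error
fruits.remove("apple")               # present element: removed (missing would raise KeyError)

a = {1, 2, 3, 4}
b = {3, 4, 5}
union = a | b         # same as a.union(b)
common = a & b        # same as a.intersection(b)
only_a = a - b        # same as a.difference(b)
either = a ^ b        # same as a.symmetric_difference(b): in exactly one set

is_sub = {3}.issubset(b)  # True: every element of {3} is in b
```

Because sets are unordered, printing them may show elements in any order; compare sets with `==` rather than relying on their printed form.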