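This article was contributed by an internet user. The views expressed are the author's own and do not represent the position of this site. This site only provides information storage space, does not own the copyright, and bears no related legal liability. If you repost it, please credit the source: http://www.ldbm.cn/p/5456.html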

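If any content infringes rights, violates laws or regulations, or is factually inaccurate, please report it to 编程新知网 by email at 809451989@qq.com; content confirmed to be problematic will be removed immediately.

Related articles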

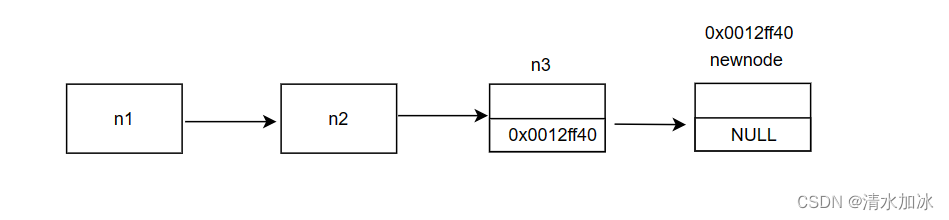

Getting Started with Data Structures: Linked Lists (A Pitfall Guide for Beginners)

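Contents

Preface

1. Linked lists

1.1 The concept of a linked list

1.2 Classification of linked lists

1.2.1 Singly or doubly linked

1.2.2 With or without a head node

1.2.3 Circular or non-circular

1.3 Implementing a linked list

Defining the linked list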

Summary

Preface: Earlier we studied the sequential list (an array-based list), the simplest linear data structure. Today we will study linked lists…
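The snippet stops before the implementation, so here is a minimal C++ sketch of a singly linked list without a head (sentinel) node, one of the variants listed under 1.2.2. The names `Node` and `SList` are hypothetical; this is an illustration, not the original article's code.

```cpp
#include <vector>

// One node of a singly linked list: a value plus a pointer to the next node.
struct Node {
    int val;
    Node* next;
};

// Singly linked list WITHOUT a head (sentinel) node: head_ is nullptr when empty.
class SList {
public:
    SList() = default;
    SList(const SList&) = delete;             // copying would double-free the nodes
    SList& operator=(const SList&) = delete;
    ~SList() {
        while (head_) popFront();             // free every node
    }

    // Insert at the front: O(1).
    void pushFront(int v) { head_ = new Node{v, head_}; }

    // Insert at the back: O(n), walks to the last node.
    void pushBack(int v) {
        Node* node = new Node{v, nullptr};
        if (!head_) {                         // empty list: the new node becomes the head
            head_ = node;
            return;
        }
        Node* cur = head_;
        while (cur->next) cur = cur->next;
        cur->next = node;
    }

    // Remove the first node, if any.
    void popFront() {
        if (!head_) return;
        Node* old = head_;
        head_ = head_->next;
        delete old;
    }

    // Copy the values out in order, e.g. for printing or testing.
    std::vector<int> toVector() const {
        std::vector<int> out;
        for (Node* cur = head_; cur; cur = cur->next) out.push_back(cur->val);
        return out;
    }

private:
    Node* head_ = nullptr;
};
```

The empty-list branch in `pushBack` is a classic beginner pitfall: without it the loop dereferences a null pointer. A list with a sentinel head node removes that special case at the cost of one extra node.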

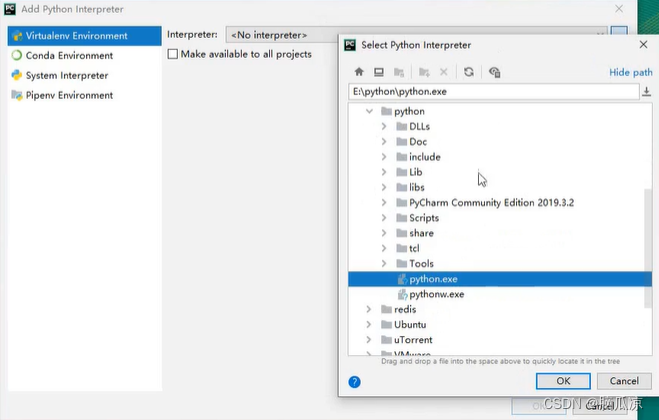

Crawler 003: Installing and using PyCharm, and setting up a Python script template (Python work notes 021)

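Here we use an IDE, PyCharm, for coding; let's see how to download it. We download the Community edition, which is free; the Professional edition is paid. After ticking the options and installing, we create a project. Here you can choose the Python interpreter: click the "..." button on the right and locate the Python interpreter we installed ourselves.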

Does Python need spaces around the equals sign? The single versus double equals sign in Python

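This article explains how to automatically add spaces on both sides of the equals sign in Python, and may serve as a useful reference. When learning Python, I noticed underlines and wavy lines appearing when writing comments…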

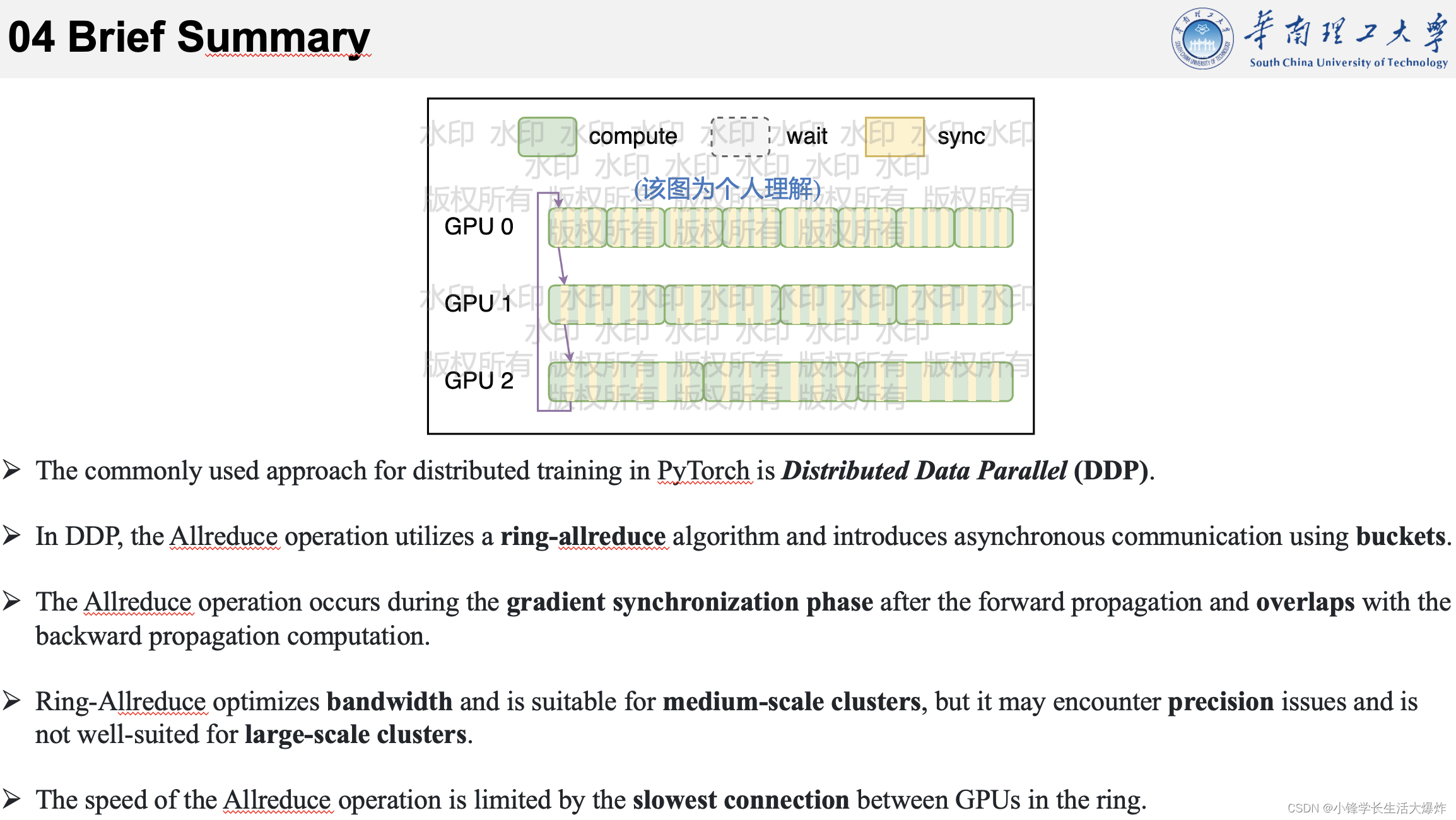

[Notes] PyTorch DDP and Ring-AllReduce

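Please credit the source when reposting: 小锋学长生活大爆炸 [xfxuezhang.cn]. Corrections are welcome if you spot any errors. Today I want to share an article that is somewhat old but classic, about a technique used in distributed training called ring-allreduce. …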
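The snippet only names the technique. As a rough illustration, here is a single-process C++ simulation of the ring all-reduce pattern: a reduce-scatter phase followed by an all-gather phase, each taking N-1 steps in which every worker sends one chunk to its right-hand neighbour. It is a sketch under the assumption that all buffers have the same length and split into N equal chunks; the function name `ringAllReduce` is hypothetical, and this is not PyTorch DDP's or NCCL's actual code.

```cpp
#include <vector>

// Simulates ring all-reduce (element-wise sum) among n workers in one process.
// Assumption: every buffer has n * chunk elements, split into n equal chunks;
// chunk c occupies indices [c * chunk, (c + 1) * chunk).
std::vector<std::vector<int>> ringAllReduce(std::vector<std::vector<int>> data, int chunk) {
    const int n = static_cast<int>(data.size());
    struct Msg {
        int to;                    // receiving worker
        int c;                     // chunk index
        std::vector<int> payload;  // copy of the sender's chunk
    };
    // One synchronous step: every worker i sends one chunk to worker (i + 1) % n.
    // All messages are collected first, so every send uses pre-step values.
    auto step = [&](int s, bool reduce) {
        std::vector<Msg> msgs;
        for (int i = 0; i < n; ++i) {
            int c = reduce ? (i - s + n) % n : (i + 1 - s + n) % n;
            std::vector<int> payload(data[i].begin() + c * chunk,
                                     data[i].begin() + (c + 1) * chunk);
            msgs.push_back({(i + 1) % n, c, payload});
        }
        for (const Msg& m : msgs) {
            for (int k = 0; k < chunk; ++k) {
                int& dst = data[m.to][m.c * chunk + k];
                dst = reduce ? dst + m.payload[k] : m.payload[k];  // add, or overwrite
            }
        }
    };
    // Phase 1, reduce-scatter: afterwards worker i owns the full sum of chunk (i + 1) % n.
    for (int s = 0; s < n - 1; ++s) step(s, true);
    // Phase 2, all-gather: circulate the finished chunks until every worker has all of them.
    for (int s = 0; s < n - 1; ++s) step(s, false);
    return data;
}
```

For example, three workers holding `{1,2,3,4,5,6}`, `{10,20,...,60}` and `{100,200,...,600}` with `chunk = 2` all end up with `{111,222,333,444,555,666}`. Each worker sends 2(N-1) chunks, i.e. about 2(N-1)/N of its buffer in total, which stays roughly constant as N grows; that is why the ring pattern is bandwidth-efficient.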

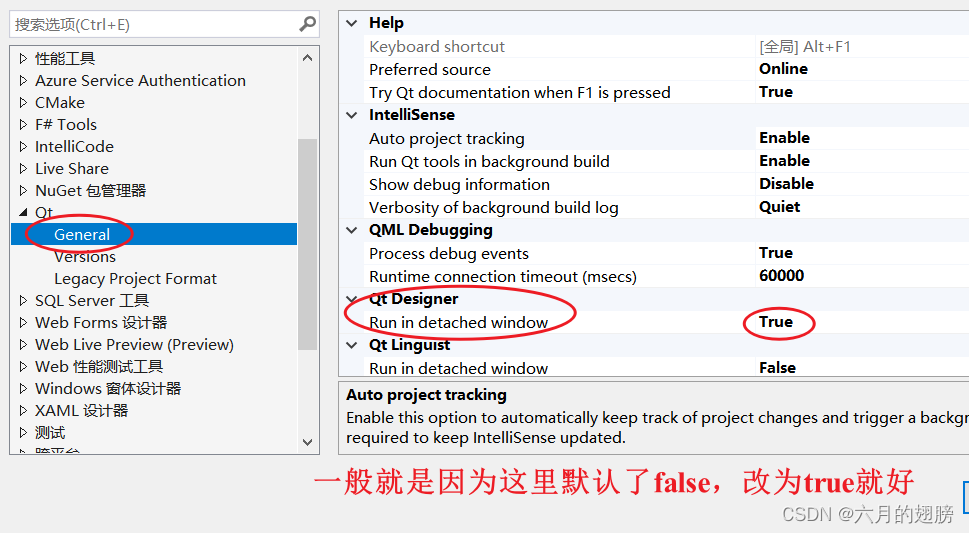

Using the Qt plugin in Visual Studio: Qt VS Tools

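1. Downloading the plugin

(1) You can search for it and install it directly from Manage Extensions in VS, but for me the download was too slow, and sometimes it would not install at all.

(2) Qt plugin download address: Index of /official_releases/vsaddin

Downloading from this address is fast; after downloading…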

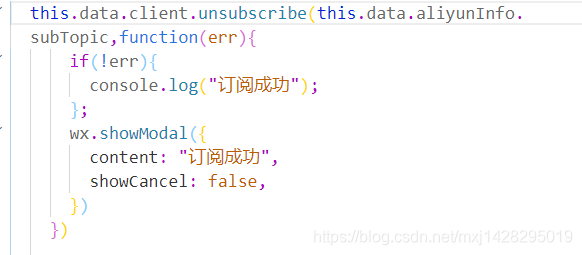

[IoT] Connecting a WeChat Mini Program to the Alibaba Cloud IoT Platform

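I. Alibaba Cloud platform side

1. Log in to Alibaba Cloud and open the IoT Platform, then enter the public instance (if you don't have one yet, click in and apply for one). 2. Click Products and create a product. 3. Name the product as you like, choose the category to suit your project, set the node type to directly connected device, and set the network connection method to W…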

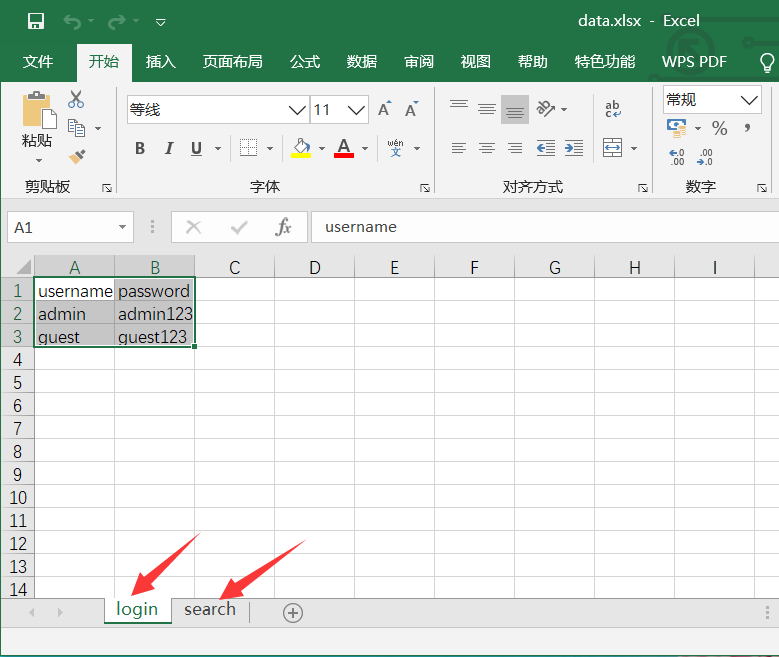

seldom: data-driven testing

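When automating a feature, if the test data differ but the steps are the same, you can use parameterization to cut down on test code.

seldom is a Web UI automation testing framework that I maintain; here I share how parameterization is implemented in seldom.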

GitHub:GitHub - SeldomQA/seld…
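The snippet does not show seldom's own parameterization API, so the following is not seldom code. It is a language-neutral illustration of the data-driven idea, written in C++: one set of test steps executed over a table of cases. The function under test, `isValidUsername`, and its 3 to 16 alphanumeric-character rule are hypothetical.

```cpp
#include <cctype>
#include <iostream>
#include <string>
#include <vector>

// Hypothetical function under test: 3-16 characters, letters and digits only.
bool isValidUsername(const std::string& name) {
    if (name.size() < 3 || name.size() > 16) return false;
    for (unsigned char ch : name)
        if (!std::isalnum(ch)) return false;
    return true;
}

// One row of test data: a label, the input, and the expected result.
struct Case {
    std::string name;
    std::string input;
    bool expected;
};

// The same test steps are applied to every row; returns the number of failures.
int runCases(const std::vector<Case>& cases) {
    int failures = 0;
    for (const Case& c : cases) {
        bool actual = isValidUsername(c.input);
        if (actual != c.expected) {
            ++failures;
            std::cout << "FAIL " << c.name << '\n';
        }
    }
    return failures;
}
```

Adding a new scenario means adding a row of data, not another copy of the test steps, which is the saving that parameterization is meant to provide.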

Integer Break: LeetCode 343

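Contents: Problem description; Method 1: dynamic programming; Method 2: optimized dynamic programming; Method 3: math. Problem description. Method 1: dynamic programming: `int integerBreak(int n) { vector<int> dp(n + 1); for (int i = 2; i <= n; i++) { int curMax = 0; for (int j = 1; j < i; j++) { curMax = max(curMax, max(j * (i - j), j * dp[i - j])); } dp[i] = curMax; } return d…`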
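Because the snippet above is cut off, here is a complete C++ sketch of Method 1 (the same DP recurrence) together with a commonly used math approach for Method 3 (split into as many 3s as possible). It assumes LeetCode 343's constraint 2 ≤ n ≤ 58, under which every result fits in a 32-bit `int`. The function names are hypothetical, and the article's Method 2 (optimized DP) is not reproduced.

```cpp
#include <algorithm>
#include <vector>

// Method 1: dynamic programming.
// dp[i] = maximum product when i is split into at least two positive integers.
int integerBreakDP(int n) {
    std::vector<int> dp(n + 1, 0);
    for (int i = 2; i <= n; ++i) {
        int curMax = 0;
        for (int j = 1; j < i; ++j) {
            // Either stop after splitting off j (j * (i - j)),
            // or keep splitting the remainder (j * dp[i - j]).
            curMax = std::max(curMax, std::max(j * (i - j), j * dp[i - j]));
        }
        dp[i] = curMax;
    }
    return dp[n];
}

// Method 3: math. Use as many 3s as possible; a remainder of 1 becomes 2 * 2.
int integerBreakMath(int n) {
    if (n <= 3) return n - 1;              // 2 -> 1 * 1, 3 -> 1 * 2
    int q = n / 3, r = n % 3;
    int threes = (r == 1) ? q - 1 : q;     // give up one 3 so 3 + 1 becomes 2 + 2
    int product = 1;
    for (int k = 0; k < threes; ++k) product *= 3;
    if (r == 1) product *= 4;
    else if (r == 2) product *= 2;
    return product;
}
```

For example, both functions return 36 for n = 10 (3 + 3 + 4, product 3 × 3 × 4). The DP runs in O(n²) time and O(n) space; the math version runs in O(n) time (the power loop) and O(1) space.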

[Beginner] Configuring Python and C++ Environments in VS Code

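Contents: I. Configuring the Python environment; II. Configuring the C++ environment: 2.1 Installing the MinGW compiler; 2.2 Installing the C/C++ extension; 2.3 Configuring the C/C++ environment ((1) configure the compiler, (2) configure the build task, (3) configure debugging settings); 2.4 A test example; 2.5 Notes; III. Plugins; Reference. I. Configuring python…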

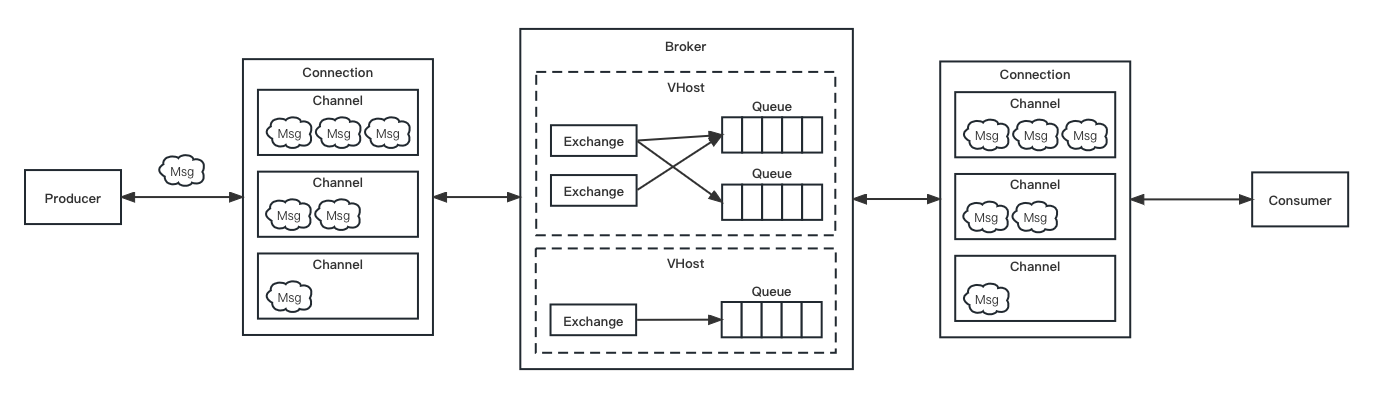

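RabbitMQ Tutorial | Chapter 2: Getting Started with RabbitMQ

👨🏻‍💻 A programmer who loves photography 👨🏻‍🎨 A designer who likes coding 🧕🏻 An editor who is good at design 🧑🏻‍🏫 A cool, aloof coding enthusiast. Hello everyone, I'm DevO…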

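Unity Behavior Decision Trees in Practice: A Detailed Guide

I. The concept of a behavior decision tree

A behavior decision tree is a decision-making model for game AI. It breaks the AI's behavior down into a series of decision nodes and describes the AI's behavioral logic through the connections between those nodes. In a behavior decision tree, each node represents a behavior or a decision, such as moving, attacking, or fleeing…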
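To make the node idea concrete, here is a minimal C++ sketch of a behavior tree with two composite nodes (Selector and Sequence) and leaf nodes for conditions and actions, wired up as flee / attack / move. The article targets Unity (C#); this C++ version, its class names, the `Agent` struct, and the 30-health threshold are hypothetical. It also omits the Running status that production behavior trees usually have for multi-frame actions.

```cpp
#include <functional>
#include <memory>
#include <string>
#include <utility>
#include <vector>

enum class Status { Success, Failure };

struct BTNode {
    virtual ~BTNode() = default;
    virtual Status tick() = 0;
};

// Leaf node: wraps a condition check or an action (move, attack, flee...).
struct Leaf : BTNode {
    std::function<Status()> fn;
    explicit Leaf(std::function<Status()> f) : fn(std::move(f)) {}
    Status tick() override { return fn(); }
};

// Sequence: runs children in order and fails as soon as one fails.
struct Sequence : BTNode {
    std::vector<std::unique_ptr<BTNode>> children;
    Status tick() override {
        for (auto& c : children)
            if (c->tick() == Status::Failure) return Status::Failure;
        return Status::Success;
    }
};

// Selector: tries children in order and succeeds as soon as one succeeds.
struct Selector : BTNode {
    std::vector<std::unique_ptr<BTNode>> children;
    Status tick() override {
        for (auto& c : children)
            if (c->tick() == Status::Success) return Status::Success;
        return Status::Failure;
    }
};

struct Agent {
    int health = 100;
    bool enemyInRange = false;
    std::string lastAction;
};

std::unique_ptr<BTNode> makeLeaf(std::function<Status()> f) {
    return std::make_unique<Leaf>(std::move(f));
}

// Priority order: flee if health is low, else attack if an enemy is in range, else move.
std::unique_ptr<BTNode> buildTree(Agent& a) {
    auto flee = std::make_unique<Sequence>();
    flee->children.push_back(makeLeaf([&a] { return a.health < 30 ? Status::Success : Status::Failure; }));
    flee->children.push_back(makeLeaf([&a] { a.lastAction = "flee"; return Status::Success; }));

    auto attack = std::make_unique<Sequence>();
    attack->children.push_back(makeLeaf([&a] { return a.enemyInRange ? Status::Success : Status::Failure; }));
    attack->children.push_back(makeLeaf([&a] { a.lastAction = "attack"; return Status::Success; }));

    auto root = std::make_unique<Selector>();
    root->children.push_back(std::move(flee));
    root->children.push_back(std::move(attack));
    root->children.push_back(makeLeaf([&a] { a.lastAction = "move"; return Status::Success; }));
    return root;
}
```

Each game frame would call `tick()` on the root once; the Selector's child order encodes the priority between behaviors, and each Sequence pairs a condition with the action it guards.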

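How is the embedded-systems job market this year?

I don't know much about other industries, so my description may be somewhat one-sided, but overall I'm optimistic about embedded systems, because you can feel that you are actually building physical products, rather than, as in front-end and internet work, producing only digital data.

Moreover, learning embedded systems is full of fun: you get to touch everything from sand to switching transistors to logic gates to chip architecture to…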

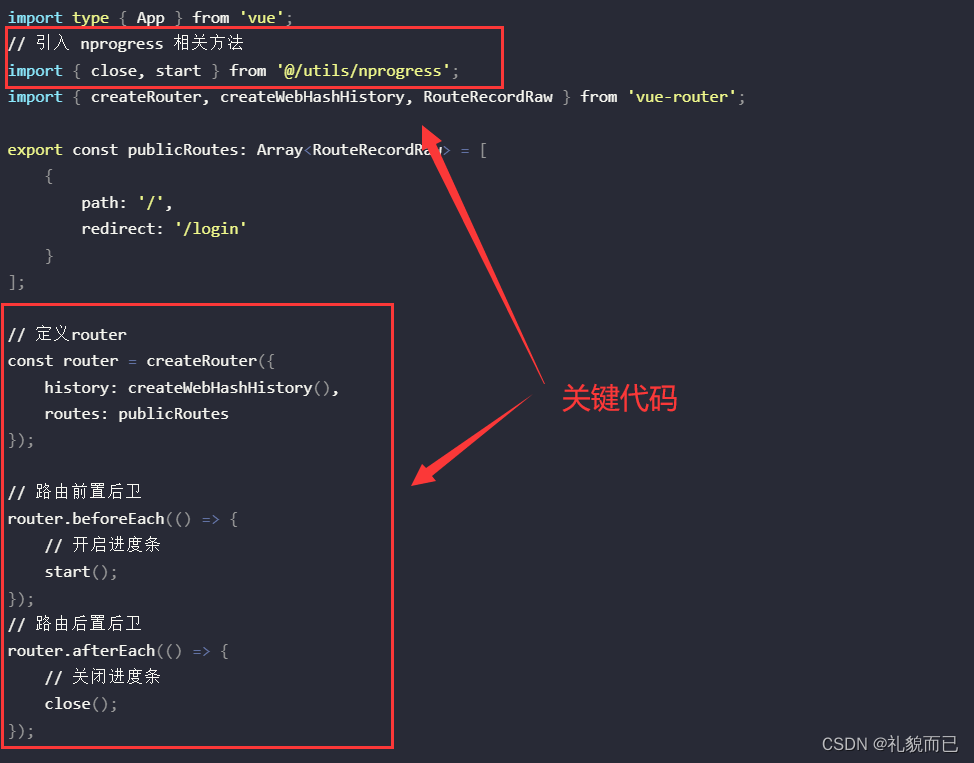

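[Vue3 + TS + Vite] Configuring nprogress to show a route-loading progress bar

Contents: I. The result first; II. Installing nprogress; III. Wrapping it in utils: 3.1 Create a utils folder under src, and an nprogress.ts file inside utils; 3.2 Write the following code in nprogress.ts; IV. Using it with route guards: 4.1 Full code of /src/router/index.ts; 4.2 Code explanation; V. Related articles, friendly…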

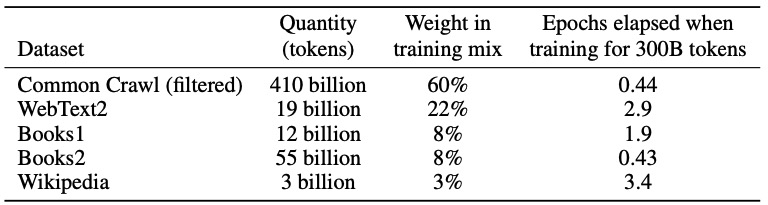

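[Deep Learning] GPT-3

In May 2020, OpenAI released GPT-3 in the 72-page paper "Language Models are Few-Shot Learners" (https://arxiv.org/pdf/2005.14165). It has 175 billion parameters and requires 700 GB of disk storage (GPT-2 has 1.5 billion parameters), a major improvement over GPT-2. According…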
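The 700 GB figure is consistent with storing each parameter as a 32-bit (4-byte) floating-point number; that storage format is an assumption here, not something the snippet states. Using decimal gigabytes:

$$
\begin{aligned}
\text{GPT-3:}\quad 1.75\times10^{11}\ \text{parameters}\times 4\ \text{bytes} &= 7\times10^{11}\ \text{bytes} \approx 700\ \text{GB}\\
\text{GPT-2:}\quad 1.5\times10^{9}\ \text{parameters}\times 4\ \text{bytes} &= 6\times10^{9}\ \text{bytes} \approx 6\ \text{GB}
\end{aligned}
$$

Storing the same weights in 16-bit floats would halve both figures.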

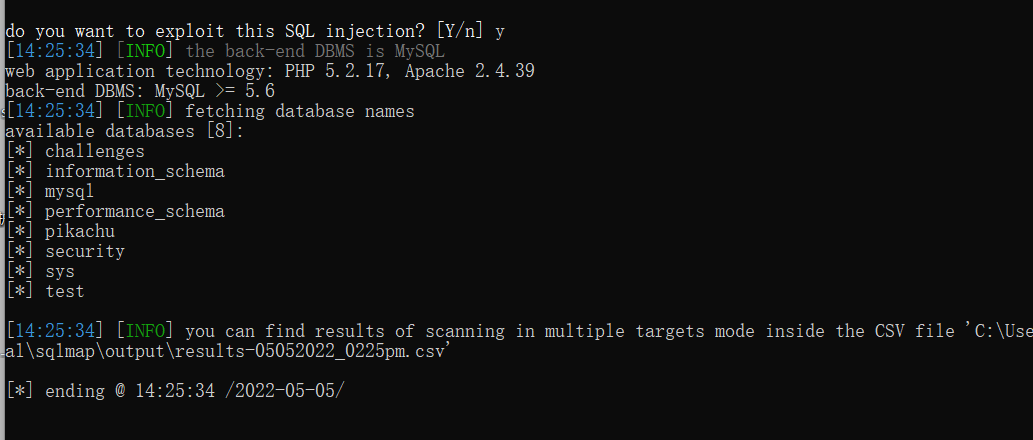

SQL Injection: sqlmap

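6.1 SQL Injection: Installing sqlmap

Introduction to sqlmap:

sqlmap is an automated SQL injection tool. Its main function is to scan for, discover, and exploit SQL injection vulnerabilities at a given URL. The databases currently supported are MS-SQL, MySQL, Oracle, and PostgreSQL. sqlmap uses four distinct SQL injection…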

[Cloud Native] What is a Deployment in Kubernetes?

Contents

Deployments

Updating a Deployment

Rolling back a Deployment

Scaling a Deployment

Deployment status

Clean-up policy

Canary deployments

Writing a Deployment spec

Deployments

A Deployment provides declarative updates for Pods and ReplicaSets.

You describe the desired state in a Deployment, and the De…
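The Kubernetes documentation describes the Deployment controller as changing the actual state toward the desired state at a controlled rate. The C++ sketch below is only a toy model of that declarative idea: the user sets a desired replica count, and each reconcile pass moves the actual count at most `maxStep` replicas closer. `ToyDeployment`, `reconcileOnce`, `reconcileUntilStable`, and `maxStep` are hypothetical and not part of any Kubernetes API; real rollouts are governed by settings such as `maxSurge` and `maxUnavailable`.

```cpp
#include <algorithm>
#include <vector>

// Toy model of declarative reconciliation (NOT the Kubernetes API).
struct ToyDeployment {
    int desiredReplicas = 0;  // what the user declares
    int actualReplicas = 0;   // what is currently running
};

// One reconcile pass: move actual toward desired by at most maxStep replicas.
// Returns true if anything changed.
bool reconcileOnce(ToyDeployment& d, int maxStep) {
    int diff = d.desiredReplicas - d.actualReplicas;
    if (diff == 0 || maxStep <= 0) return false;
    int step = diff > 0 ? std::min(diff, maxStep) : std::max(diff, -maxStep);
    d.actualReplicas += step;
    return true;
}

// Repeats reconcile passes until the actual state matches the desired state,
// recording the replica count after each pass.
std::vector<int> reconcileUntilStable(ToyDeployment& d, int maxStep) {
    std::vector<int> history{d.actualReplicas};
    while (reconcileOnce(d, maxStep)) history.push_back(d.actualReplicas);
    return history;
}
```

Scaling from 0 to 5 replicas with `maxStep = 2` passes through 0, 2, 4, 5: the user only states the target, and the controller decides how to get there.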

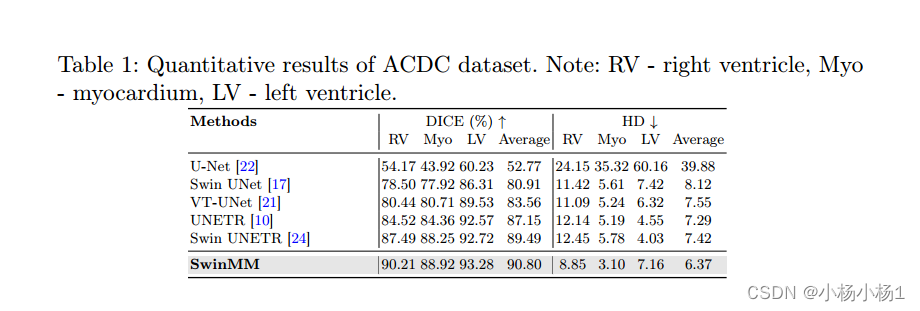

Paper reading, August 1, 2023

Contents: MCPA: Multi-scale Cross Perceptron Attention Network for 2D Medical Image Segmentation (Abstract; Method; Experimental results); SwinMM: Masked Multi-view with SwinTransformers for 3D Medical Image Segmentation (Abstract; Method; Experimental results). MCPA: Multi-scale Cross Perceptron Att…

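PKG content viewer Suspicious Package for Mac: installation tutorial

Suspicious Package for Mac is an application for inspecting the contents of PKG installer packages on macOS. It can show every file in a package, along with information such as the scripts that will run, the developer, and the source.

Suspicious Package for Mac is simple to use: just select the pkg inst…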

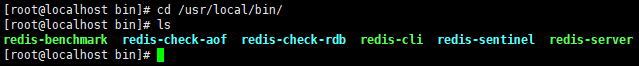

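Installing, deploying, and basic use of Redis

Contents

I. Installing Redis directly on Linux

(1) Download the Redis installation package

(2) Install the GCC build environment

(3) Install Redis

(4) Start the service

(5) Start in the background

II. Installing and deploying Redis with Docker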

…